An Immerse response

by Joanna Zylinska

What is most interesting about Isaac Asimov’s famous “Three Laws of Robotics,” now frequently invoked in the context of research into AI, is not so much the codification of behavior they prescribe for his narrative creations. What is most interesting, for me at least, is the fact that his “robot ethics” is part of fiction.

Asimov’s Three Laws of Robotics:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.

2. A robot must obey orders given it by human beings except where such orders would conflict with the First Law.

3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

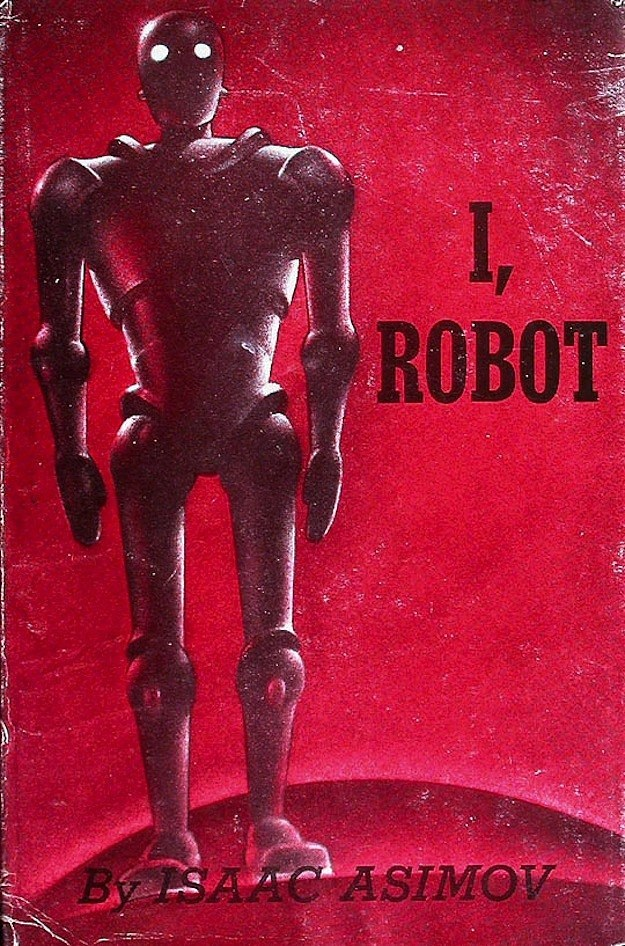

Developed in a number of short stories and published in the 1950 collection I, Robot, Asimov’s ethical precepts are somewhat mechanical, reductive and naively humanist. Like many other deontological (i.e. rule-based) moral theories, they form an elegantly designed ethical system that will ultimately fail because glitchy humans will at some point, through stupidity, malice, or sheer curiosity, introduce errors into it.

Yet Asimov was no moral philosopher, and so to extract his laws as a stand-alone moral proposal is to do a disservice to the imagination and creative potential of his stories. What needs commending instead is Asimov’s very gesture of doing ethics as/in fiction, or even his implicit proposition that ethical deliberation is best served by stories rather than by precepts or commandments.

This is why I want to suggest that the most creative — and most needed — way in which storytellers can use AI is by telling better stories about AI, while also imagining better ways of living with AI.

Reflecting on the nature of this double “better” would be the crux of such storytelling. Mobilizing the tools of imagination, narrative, metaphor, parable, and irony, storytellers can perhaps begin by blowing some much-needed cool air on the heat and hype around AI currently emanating from Silicon Valley.

To propose this is not to embrace a technophobic position or promote a return to narrative forms of yesteryear: detached, enclosed, single-medium. It is rather to encourage storytellers to use their technical apparatus with a view to exposing the blind spots behind the current AI discourse. Storytelling as a technical activity can thus channel the Greek origins of the term tekhnē, which refers both to technical assemblages such as computers, databases, and neural nets and, perhaps more crucially, to the very process of bringing-forth, or creation.

“Intelligence” itself is something of a blind spot in AI, with the field’s foundational concept either being taken for granted without too much interrogation or molded at will and then readjusted, depending on the direction the research has taken. Having moved away from the seemingly impossible desire to develop Artificial General Intelligence modeled on the human brain, researchers have recently repositioned AI as a sophisticated agent of pattern recognition.

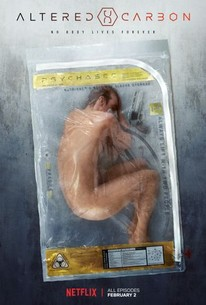

AI today is thus a post-cyberpunk incarnation of Cayce Pollard, the protagonist of William Gibson’s aptly titled 2003 novel Pattern Recognition: an advertising consultant with an almost superhuman ability to make connections and find meanings within the flow of marketing data. In its use of brute-force computation, AI is indeed capable of achieving goals that can delight, surprise or even shock humans: from Amazon’s recommendation algorithms through to the autocomplete function on mobile phones, facial recognition, or even the ability to win at complex board games such as Go. The fuzziness and relative narrowness of the definition of “intelligence” notwithstanding, these achievements have led to some rather bold claims. The boldest of all is the prediction of an imminent era of singularity, in which humans will supposedly merge with algorithms to achieve disembodied (and hopefully immortal) intelligence; yet, as the recent Netflix series Altered Carbon shows, eternal salvation may be available only to the very rich.

The resurfacing of hubristic narratives about human futures, spurred on by the latest AI research, has been accompanied by the excavation of the myth of the robot (and its cousins, the android and the cyborg) as the human’s other, an intelligent companion who can always turn into an enemy, such as HAL 9000 from 2001: A Space Odyssey or the killer robotic dog from season 4 of Black Mirror. Popular imagination has thus once again been captured by both salvation narratives and horror stories about “them” taking over “us”: eliminating our jobs, conquering our habitat, and killing us all in the end.

Yet such stories, in both their salvation- and horror-driven guises, are premised on a rather unsophisticated model of the human as a self-enclosed, non-technological being locked in an eternal battle with tekhnē. However, humans have always been technological, in the sense that we have emerged with technology and through our relationship to it, from flint stones used as tools and weapons to genetic and cultural algorithms.

Instead of pitching the human against the machine, shouldn’t we rather see different forms of human activity as having always been to some extent artificially intelligent? What if, instead of “them” taking over, it is really just us doing it to ourselves? Does it change the story in any way? Does it call for some better questions?

To recognize this shared technological kinship is not to say that all forms of AI are created equal (or equally benign), or that they may not have unforeseen consequences. It is rather to make a plea for interrogating some of the claims spawned by the dominant AI narrative, and by its intellectual and financial backers. This acknowledgement repositions the seemingly eternal narrative of the human’s battle against technology as a politico-ethical injunction. The questions that need to be asked concern the different modes of life that the currently available AI algorithms enable and disable:

- Whose brainchild (and bodychild) is the AI of today?

- Who and what does AI make life better for?

- Who and what can’t it see?

- What are its own blind spots?

Storytellers can help us explore the answers to these questions by looking askew at the current claims and promises about AI, with their celestial as well as apocalyptic undertones, and by retelling the dominant narratives in different genres and different media. As Donna Haraway has poignantly highlighted in Staying with the Trouble, “It matters what knowledges know knowledges. It matters what relations relate relations. It matters what worlds world worlds. It matters what stories tell stories.” Storytelling may thus be the first step on the way to responsible AI.

Joanna Zylinska is Professor of New Media and Communications at Goldsmiths, University of London, the author of seven books on technology, ethics and art, and a photomedia artist. Her latest book is The End of Man: A Feminist Counterapocalypse (University of Minnesota Press, 2018).

Immerse is an initiative of the MIT Open DocLab and The Fledgling Fund.