Family2Family: First Steps

In November 2018, we presented the prologue of Marrow: I’ve Always Been Jealous of Other People’s Families at IDFA DocLab. The experience centers on the family portrait of one of Marrow’s characters, GAN, and looks at its origin story through memories around a familiar event: a family dinner.

Made by

using our t2i: https://t2i.cvalenzuelab.com/

Marrow is an interactive research project exploring the possibility of mental illness in the intelligent machines we create, driven by the assumption that if machines have mental capacities they also have the capacity for a mental disorder. I previously wrote in detail why I believe this is a possibility.

Intelligent beings, those with the ability to self-reflect and self-reference, are all shaped by early experiences. The way we design learning environments, develop training conditions and structure our code will determine the machine’s way of thinking. All of these are embedded in the way it will perceive and think. Much as humans carry their mental baggage from birth to death based on their life experience, so will machines. Immense efforts are being invested in making learning models more efficient, accurate and fast to adapt, while meager efforts are being devoted to understanding errors and bugs caused by this approach. In the age of learning machines, the glitch might not be a code problem but a learning problem.

This project reflects on the machine learning process to question what these automated systems will eventually mimic. Marrow looks at some of the most influential machine learning models through psychological lenses, drawing an emotional set of rules to generate stories that represent the inner working of the algorithms. The goal is to broaden the conversation about how training methods and code structures reflect human limitation, replicate political structures and social vulnerability. It is a way to stop and ask — should we be building these systems at all?

The restriction of knowledge to an elite group destroys the spirit of society and leads to its intellectual impoverishment. ― Albert Einstein

Background

Early conversations with Caspar Sonnen, IDFA’s Head of New Media, revealed the DocLab’s yearly theme: Dinner parties. We were inspired to use this as a way to develop a prologue to the experience we’d been ruminating on for two main reasons: 1) The correlation between family and smart technology as systems that shape behaviors, and 2) the analogy between daily rituals like a family dinner and training environments.

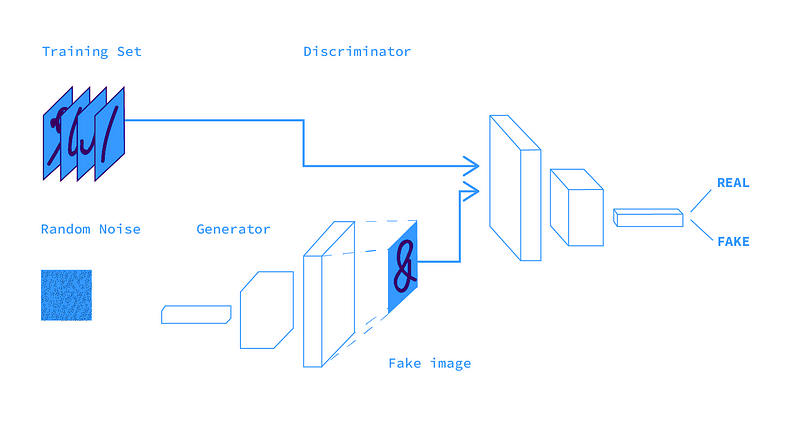

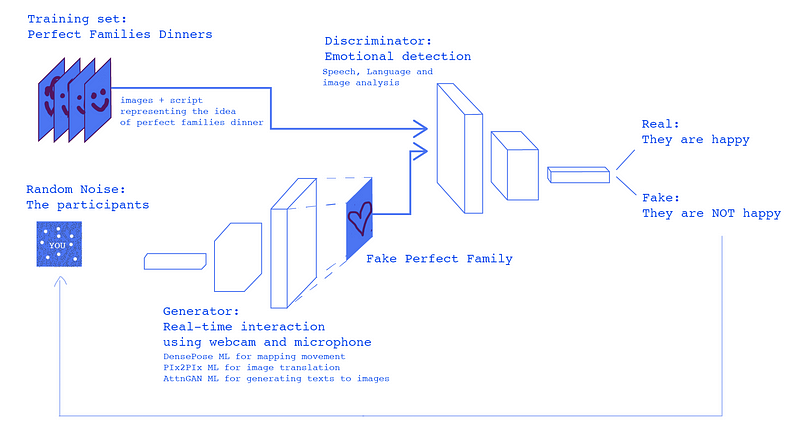

Our focus for the prologue was set on Generative Adversarial Networks (GAN), mainly for the reason that this model generates visual outputs that encourage and support conversations on mental states. GAN is a machine learning model composed of two networks: the discriminator (D), which is trained on data from the physical world, and the generator (G), which is designed to learn this data and generate new content that resembles it.

The philosophy behind this model is highly influenced by the theoretical physicist Richard Feynman’s idea that “What I cannot create I do not understand.” If we want to teach common sense we need to allow the machine to create it.

There are inspiring artists who are working and developing with GAN and generating unique and exciting art that pushes the model’s capabilities to its limit. Some that I’ve been following include Mario Klingemann, Robbie Barrat and Refik Anadol.

We were most interested in using GAN to consider the code structure as a mental structure. The two networks, D and G, are designed to battle: D attempts to catch fake images, while G tries to trick D into believing they are all real. This state of inner competition supports a mental state of doubt, tension and paranoia. A mental structure that is constantly doubting what is real and what is not.
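To make this battle a little more concrete, here is a minimal sketch of the two opposing losses from the standard GAN formulation, in plain Python. It is not code from the installation: the function names and the sample scores are illustrative assumptions. `d_real` and `d_fake` stand for the probability D assigns to a real image and to a generated image being real, and the generator loss shown is the common "non-saturating" variant.

```python
import math

def discriminator_loss(d_real: float, d_fake: float) -> float:
    """Cross-entropy for D: low when real images score near 1 and fakes near 0."""
    return -(math.log(d_real) + math.log(1.0 - d_fake))

def generator_loss(d_fake: float) -> float:
    """Non-saturating loss for G: low when D scores a generated image near 1."""
    return -math.log(d_fake)

# Hypothetical scores (assumed values, for illustration only).
scenarios = {
    "D catches the fake": (0.9, 0.1),
    "D is fooled": (0.9, 0.8),
}

for name, (d_real, d_fake) in scenarios.items():
    d_loss = discriminator_loss(d_real, d_fake)
    g_loss = generator_loss(d_fake)
    print(f"{name}: D loss = {d_loss:.3f}, G loss = {g_loss:.3f}")
```

Whatever lowers one loss raises the other, which is the constant doubt described above: neither network can settle while the other keeps improving.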

In my last post, I discussed a few ideas about how our code “forces” algorithms to find meaning in data, and the Text 2 Image tool we developed using AttnGAN to visualize this abstract concept and its hidden meanings. For the purpose of the prologue, we wanted to implement this in an immersive experience while exploring the concept behind the “real” dataset that trains D to evaluate G and channel the network to the “right” meaning. REAL. RIGHT. Tough words.

GAN’s dataset contains images that provide examples of what our visual world looks like. We refer to these as “samples from the true data distribution”. But what is actually true when defining our visual world? When we explore concrete ideas about the world, such as buildings that reflect architectural movements or fashion trends, this question is much easier to satisfy through a collection of images. But what about ideas of love? Family? Gender? How can we find one common way to represent concepts and feelings that cannot be explored from a binary point of view, that are so subjective and culture-oriented?

The common way to represent an idea is to simulate it, and simulation, by its nature, will never really be the thing itself but a twisting of meaning. We are attaching ourselves to an idea of ourselves, and now we are training life-changing technology to see us through those fake lenses. When the connection between ourselves and the idea of ourselves breaks down, the paranoia starts. This felt like the right entry point to this project.

We live in a world where there is more and more information and less and less meaning. ― Jean Baudrillard, Simulacra and Simulation.

Family2Family

If we train a machine learning model with a dataset of a “perfect family dinner,” what image will reflect back to us? As a child, I used to fantasize about what it would be like to have a “better family.” Seeing ourselves through fantasies and illusions usually reveals a very distorted image. It is fertile ground for a schizophrenic nature, full of hallucinations of what could’ve been and a great paranoia about the lie that exists underneath.

Screenshot from a Google search “perfect family dinner.” If there is such a thing as perfect, do we want to pursue it?

I’ve Always Been Jealous of Other People’s Families was an experience for four people at a time, invited to gather around a dinner table and act as a family. The table was set with a microphone, camera, sound and projections, provoking something familiar yet disturbing. Each “family” was processed through the logic of GAN via different machine learning networks, which were trained on a dataset of “Perfect Family Dinner,” capturing participants through that lens. The projected output was a distortion, revealing an attempt to fill in the gaps between fantasy and reality: a hidden space of error and loss.

Marrow @ IDFA

2018. Image by Annegien van Doorn © 2018 NFB.

Marrow @ IDFA

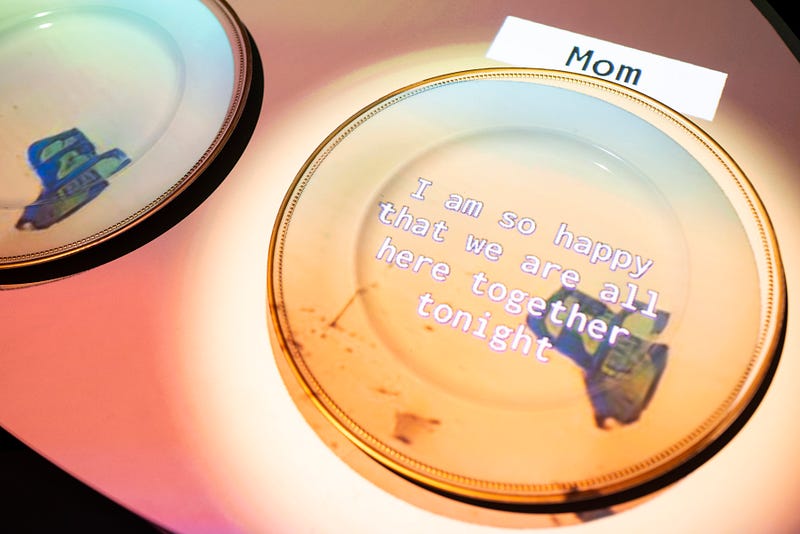

2018. Image by Annegien van Doorn © 2018 NFB.After sitting around the table each participant received a role of one of the family members: Mom, Dad, Brother, and Sister. On the plates, we projected a script that tells fragments of this family’s story—a family that will become familiar in the following versions. We linked our text-to-image development and speech recognition to generate images on the plates. The text was a catalyst for the interaction, like a script of code, where the participants — in order to move the experience forward — were forced to act by it, revealing and affecting the visuals and sounds as the story unfolds.

Marrow @ IDFA 2018. Image by Annegien van Doorn © 2018 NFB.

It was interesting to see how the familiar setting of a family dinner dissolved the awkwardness of bringing strangers together and supported positive reactions with humor and play. For the speech recognition, we used Google Cloud Speech-to-Text, as it not only performed faster and more accurately than the Watson and Microsoft services in our tests but also showed impressive results with noise canceling and handling of diverse voices, pitches, and accents. We also scripted multiple versions of our texts and duration engine in order to avoid any unwanted stammers of the speech-to-text mechanism.
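One way to read “multiple versions of our texts” is as a set of accepted variants per scripted line, matched loosely against whatever the speech recognizer returns. The sketch below illustrates that idea with Python’s standard `difflib` module. It is not the installation’s code: the script lines, the `SCRIPT` structure, the `matches_line` and `advance` helpers and the 0.8 threshold are all hypothetical.

```python
from difflib import SequenceMatcher

# Hypothetical script: each step lists accepted variants of the same line.
SCRIPT = [
    ["can you pass the salt", "pass the salt please"],
    ["how was school today", "how was your day at school"],
]

def normalize(text: str) -> str:
    """Lowercase, drop punctuation and collapse whitespace."""
    kept = "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace())
    return " ".join(kept.split())

def matches_line(transcript: str, variants: list[str], threshold: float = 0.8) -> bool:
    """True if the transcript is close enough to any accepted variant."""
    spoken = normalize(transcript)
    return any(
        SequenceMatcher(None, spoken, normalize(variant)).ratio() >= threshold
        for variant in variants
    )

def advance(step: int, transcript: str) -> int:
    """Move to the next script step only when the current line was spoken."""
    if step < len(SCRIPT) and matches_line(transcript, SCRIPT[step]):
        return step + 1
    return step
```

With a tolerance like this, a hesitant reading such as “can you uh pass the salt” would still move the story forward, while an unrelated sentence would leave the script where it is.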

Marrow @

IDFA 2018. Video by Annegien van Doorn © 2018 NFB.

Marrow @

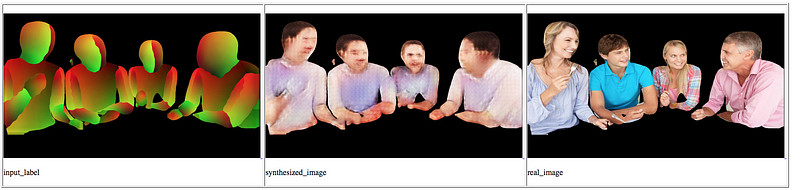

IDFA 2018. Video by Annegien van Doorn © 2018 NFB.In addition, we developed a visual interaction as well. We used DensePose to extract the poses of the perfect families dataset and trained a model called Pix2PixHD, which uses the basic structure of GAN to translate images. This allowed us to generate images from the corresponding poses.

DensePose to Pix2Pix: training sample, epoch 5 out of 200

Once Pix2Pix was trained, we could run a live camera feed through the model, replacing the user with the fantasy one from the training dataset. While the training process takes a long time, the interaction itself can happen in real-time.
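The split between slow training and fast interaction can be sketched as a per-frame loop with two inference steps. This is only an outline under stated assumptions: `translate_stream`, `fake_pose` and `fake_generator` are hypothetical names, and the two placeholder stages stand in for DensePose and a trained Pix2PixHD generator, whose real interfaces are not shown here.

```python
from typing import Callable, Iterable, Iterator, List

Frame = List[List[int]]  # stand-in for an image: rows of pixel values

def translate_stream(
    frames: Iterable[Frame],
    estimate_pose: Callable[[Frame], Frame],
    generate: Callable[[Frame], Frame],
) -> Iterator[Frame]:
    """Run the two inference steps on each incoming frame, one at a time."""
    for frame in frames:
        pose_map = estimate_pose(frame)  # camera image to pose representation
        yield generate(pose_map)         # pose representation to output image

def fake_pose(frame: Frame) -> Frame:
    """Placeholder pose step: mark bright pixels as 'body'."""
    return [[1 if px > 127 else 0 for px in row] for row in frame]

def fake_generator(pose_map: Frame) -> Frame:
    """Placeholder generator: paint 'body' pixels white."""
    return [[255 * px for px in row] for row in pose_map]

# One tiny hypothetical camera frame, processed lazily as it arrives.
camera = [[[0, 200], [130, 10]]]
for output in translate_stream(camera, fake_pose, fake_generator):
    print(output)
```

All the expensive learning happens before this loop runs; at the table, each frame needs only one pass through each stage.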

Screen recording of one person via “perfect family dinner” dataset. This shows the DensePose estimations and the image translation that is being done in real-time.

The experience aimed to direct the participants to pose and act like a family from the dataset. If the participants played along they would notice how their poses affected their distorted image.

Marrow @ IDFA 2018. Image by Annegien van Doorn © 2018 NFB.

In this context, the discriminator was trained on a collection of images of perfect family dinners, while the generator aimed to match it to the real-time “families” via scripted and unscripted interactions.

We wanted to create a room that let you, as a user, embody the machine learning process — to use physical conditions to connect and attach people to the machine learning process. We wanted to hack into the logic of the Pix2Pix model and to generate an installation that translates Family2Family: the users’ family to the data family.

Marrow @ IDFA 2018. Image by Annegien van Doorn © 2018 NFB.

Looking for ways to develop an installation that represents a mental state by itself pushed us to explore various machine learning networks, with the goal of linking them together and letting them affect each other through time and space. For example, we explored LIVE_SER, a speech emotion recognition network, to detect whether the participants were as happy as the original dataset family. We also explored char-rnn to generate the script, and PoseNet to support more automation within the interaction.

The biggest challenge was combining all the components so that they enriched each other and the experience, without overlapping or colliding. But as the deadline got close, we chose to focus on a more controlled format and ended up not using them, although they were fully developed.

While most of the installation ran in real-time, the script and the character’s voice responses were pre-rendered. For the sound, we instead chose a generative approach, connecting the speech and images to audio instruments to generate a uniquely personal experience.

Future iterations

The physical setting and multi-user interaction supported an experience and creative process that takes us from machine learning logic to human direction, reflection and design — and then back to machine learning (which generates real-time output) and once again to the human, as the participants trigger and move things forward. We are inspired by this kind of a sharing process that represents an honest exploration of creative expression and investigation.

We were extremely excited to learn how physical interactions support deep and meaningful learning processes. We wish to anchor the experience as much as we can in the real world in order to communicate this technology: to ground it where we, as humans, can connect in a real way and see the technology as an extension of real life rather than a replacement for it. We aim to pursue this direction further while exploring ways to link up with an open-source online platform.

In the fall, MIT Open Documentary Lab and IDFA DocLab announced a research collaboration to interrogate user experience and impact, in which they plan to research Marrow’s user experience and investigate its meaning within art and AI. Next, we will be exploring other models and data structures; each will be developed as a room within the house of this family.

More soon ❤

Credits: An Atlas V and Raycaster production in co-production with National Film Board of Canada, in association with Runway ML and the support of MIT Open Documentary Lab & IDFA DocLab.

Learn more: https://www.nfb.ca/interactive/marrow

- Experience by ~shirin anlen

- Executive producers: Arnaud Colinart and Hugues Sweeney

- Producers: Emma Dessau and Louis-Richard Tremblay

- Technical director: Cristobal Valenzuela

- 3D Design & Development: Laura Juo-Hsin Chen

- Speech Designer: Avner Peled

- Advisor: Ziv Schneider

Immerse is an initiative of the MIT Open DocLab and The Fledgling Fund, and it receives funding from Just Films | Ford Foundation and the MacArthur Foundation. IFP is our fiscal sponsor.