Field Notes: What’s New With VR in the Newsroom?

“In the new year, people will get real about virtual reality,” suggests Ray Soto, Gannett’s director of emerging technologies, in the Nieman Lab’s annual round-up of predictions for journalism.

But what does “getting real” entail? Soto was one of four VR innovators I interviewed at a pair of conferences this fall—the Journalism 360 Immersive Storytelling Festival (which took place at the Online News Association’s annual gathering), and the Double Exposure Investigative Film Festival. See our conversations below to learn how reporters and technologists are putting VR to work.

Laura Hertzfeld, Director, Journalism 360

Tell us about the Journalism 360 project.

Journalism 360 is funded by the Knight Foundation and Google News Lab and run through ONA. We funded a bunch of projects through our grant challenge, which started in March of 2017, and announced the winners in July. Eight of the 11 grantees are here.

The projects ranged from an augmented reality project on facial recognition that identifies bias when you’re reading news stories, to room-scale VR experiences like the one from USA Today on the US border wall. So there’s a huge range in how we’re defining immersive storytelling. Our bigger goal is to build a community around immersive storytellers.

You’ve been editing your Medium blog for about a year. What are some of the surprising things these projects are doing?

I like that we’re defining “immersive” very, very broadly. There are some things shot on Insta360 Nanos that get a little narration and graphics. Euronews is doing a project on the Svalbard seed vault, about the future of the environment. It’s all 360 video.

Then you have the really crazy tech stuff that Emblematic and FRONTLINE are doing with avatars of humans, which brings up a lot of ethical questions. We have Robert Hernandez from USC training the next generation of journalists; USC is one of only a handful of programs doing this work…

What are the big questions journalists have when they want to start doing this?

We have a lot of “how to” info on our Medium blog. What cameras should I use? How much does it cost?

On the panel yesterday, Raney Aronson from FRONTLINE said there isn’t really a price-point yet for these big projects because we just haven’t done enough of them. A lot of companies that work with Hollywood and with gaming studios are interested in working with news organizations because they want to do this stuff. It’s still very expensive to do big projects but relatively cheap to go out with a 360 camera and do a trial-and-error experiment.

Anything else Immerse readers should know?

I’m probably more interested in hearing from them! We do monthly Google Hangouts on postproduction, gear, and projects we think are cool. I’m really interested in film festivals and the overlap between the art and documentary world.

Ray Soto, Director of Emerging Technology, Gannett

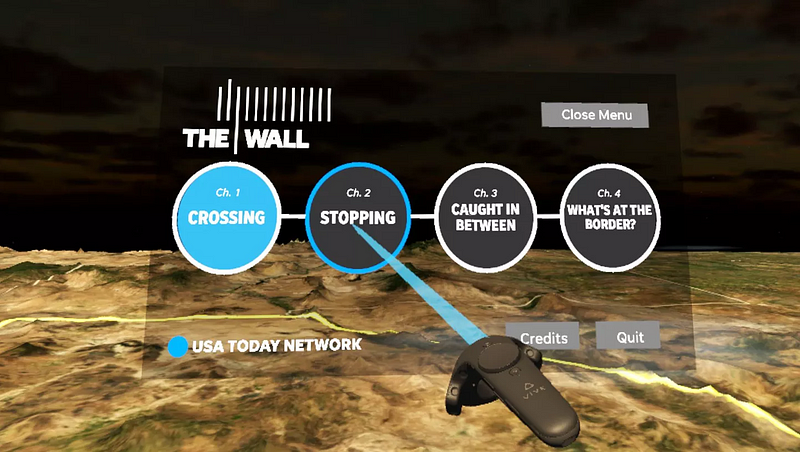

Tell us about USA Today’s multimedia production about the border wall.

It’s a network feature story we worked on for more than three months with several colleagues from the Arizona Republic and other news outlets near the border.

Our goal, particularly with the VR side, was to create an experience that relates to different topics along the border: human smuggling, drug trafficking, impacts on the communities and the environment.

We wanted to leverage LIDAR and photogrammetry to recreate the terrain, to create on-the-ground experiences that really help folks feel that sense of presence. We pulled lots of technologies into this, and what we learned fairly quickly is that the production process was more complex than we expected. But once we had our environment, we realized we had something pretty special and were on the right track.

I know about photogrammetry but what is LIDAR?

LIDAR stands for light detection and ranging. We partnered with a company called Aerial Filmworks, which had LIDAR capture technology mounted in a helicopter. Think about a laser ping capturing the topography. LIDAR creates a point cloud that can help you visualize the undulation of the terrain. But LIDAR wasn’t necessarily giving us what we needed for a VR on-the-ground experience. When you take that point cloud and render it as a 3D object, there is a lot of manual work needed to make it look good. So we ended up relying more on photogrammetry; that worked out much better than LIDAR.
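To make the "point cloud" idea concrete, here is a minimal sketch of one common way to turn scattered elevation points into a coarse terrain grid: bin the points into square cells and average the height in each cell. The function name, the cell size, and the sample points are all invented for illustration; this is not the pipeline Gannett or Aerial Filmworks used.

```python
from collections import defaultdict


def point_cloud_to_heightmap(points, cell_size):
    """Bin (x, y, z) points into square cells and average the elevation per cell.

    Hypothetical helper for illustration only.
    """
    sums = defaultdict(float)
    counts = defaultdict(int)
    for x, y, z in points:
        # Each point falls into the grid cell that contains its x/y position.
        cell = (int(x // cell_size), int(y // cell_size))
        sums[cell] += z
        counts[cell] += 1
    return {cell: sums[cell] / counts[cell] for cell in sums}


# Synthetic sample points in metres, made up for this example.
sample_points = [
    (0.5, 0.5, 10.0),
    (1.5, 0.2, 12.0),
    (0.9, 0.1, 14.0),
    (2.5, 3.5, 30.0),
]

heightmap = point_cloud_to_heightmap(sample_points, cell_size=2.0)
for cell, elevation in sorted(heightmap.items()):
    print(cell, elevation)
```

A grid like this still needs meshing, texturing, and cleanup before it looks good at ground level, which is consistent with the manual work Soto describes.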

How do you do photogrammetry on a giant mountain?

The process was fairly straightforward. I’ll use Big Bend as an example: the portion that we had rendered out was about 1,200 photographs at around 100 megapixels each. That’s 16.8 gigabytes worth of data. Within each image, the metadata for geolocation was incorporated. So you pull all of those into a program, set it out, and it shows you the flight path and renders out a 3D object. That’s essentially the process, but then there’s optimization with 3D software: getting it to render and look halfway decent.
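The figures quoted for Big Bend can be sanity-checked with quick arithmetic. The sketch below assumes decimal gigabytes (1 GB = 1,000,000,000 bytes) and treats "about 1200" and "around 100 megapixels" as exact, so the results are rough averages rather than measured values.

```python
PHOTO_COUNT = 1200
MEGAPIXELS_PER_PHOTO = 100
TOTAL_BYTES = 16_800_000_000  # 16.8 GB, assuming decimal gigabytes

# Average file size per photograph.
avg_bytes_per_photo = TOTAL_BYTES // PHOTO_COUNT

# Total pixel count across the whole capture, in megapixels.
total_megapixels = PHOTO_COUNT * MEGAPIXELS_PER_PHOTO

# Average storage per pixel across the data set.
bytes_per_pixel = TOTAL_BYTES / (total_megapixels * 1_000_000)

print(f"{avg_bytes_per_photo / 1_000_000:.0f} MB per photo")
print(f"{total_megapixels / 1000:.0f} gigapixels in total")
print(f"{bytes_per_pixel:.2f} bytes per pixel on average")
```

That works out to roughly 14 MB per photo and about 0.14 bytes per pixel. Uncompressed 8-bit RGB takes 3 bytes per pixel, so the 16.8 GB figure most likely refers to compressed image files.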

So I’m the user and I’m looking at something like a 3D Google map. I’m looking from above and see an outline of the proposed border wall and then I zoom into the landscape to get to the stories. Is that how it works?

We were working with so many different journalists that we wanted to pull in as much content as we could, so we could build a narrative arc across these different locations along the border, ultimately across four different chapters. So when you first get in, we set the tone with a strong narrative. Explore, see for yourself, and learn. Our goal was to educate.

I see people using the Vive to walk around the animation. Can they also use Google Cardboard?

For this particular project, not yet. We built this exclusively for the Vive to have that room-scale experience. It doesn’t perform very well on mobile devices.

This sounds like a big, expensive project. What is the projected life span?

We’re thinking about this as an evergreen project. We identified stories that we feel are strong enough to stand on their own for a while. Those stories won’t change: the impact on the environment, the drug smuggling aspects. If there is an opportunity, we’ll revisit it. But the production process took about three months. Two months was just exploring how to build the terrain, LIDAR, and photogrammetry. Once we figured out that process we built this within two weeks.

So what do you think: Should they build the wall?

It’s not for me to say. What we were hoping to do with this project is help folks understand that this is a more complex issue than whether a wall should be built or not. There are already some fences and walls built along the path but not as many as you may think. There are three different terrain types, which really complicates things. Big Bend is essentially a massive canyon: You’ll never be able to build a wall there… Anyway, I’d encourage audiences to come by and experience this work for themselves. We’d love feedback.

Ben Kreimer, independent journalism technologist

So, tell me about the project I just experienced.

You saw Making Waves: Women and Sea Power on the HTC Vive. It’s the story of a group of women seaweed farmers in Zanzibar who are innovating in the way they grow seaweed, planting in deeper waters. Because sea temperatures are rising, they can no longer grow seaweed in shallow waters, and seaweed farming is their primary source of income.

So what were you hoping to do by filming these women in 360 video?

Take you to Zanzibar to see the places where they work and live, to see the work that they are doing underwater.

You had to film on land and underwater. What were some of your technical challenges?

Underwater brings up a whole host of challenges. Cameras fog up. You’ve got refraction, and so your lens field-of-view is less than it is on land. We used a 3D-printed mount from Shapeways holding three Kodak SP360 4K cameras. On land, shooting is pretty straightforward. For the aerial shots I have a custom DJI Mavic Pro 360.

When I was on land, I couldn’t see the camera. But you had a diver with a camera next to me underwater. Is it harder to “erase” the camera underwater? Or was there another reason why you left the diver in?

For the underwater shots, we wanted to leave the diver in because the story was partly about the relationship between the humans and the ocean. It was part of the shot. We never wanted or even considered removing the diver, versus the other shots, with just the tripod on land.

The moment that struck me most was when I was surrounded by the women laughing. What was your favorite moment?

I’d probably go with the drone shots. I’ve been working with drones for about five years. When I was out flying over the seaweed, it was really striking. That aerial perspective is always a little bit magical. But I also like the scuba diver going through the small cave, with the cloud of fish. THAT is a 360 shot. That is what 360 was meant to capture.

Debra Anderson, co-founder and CEO, Datavized

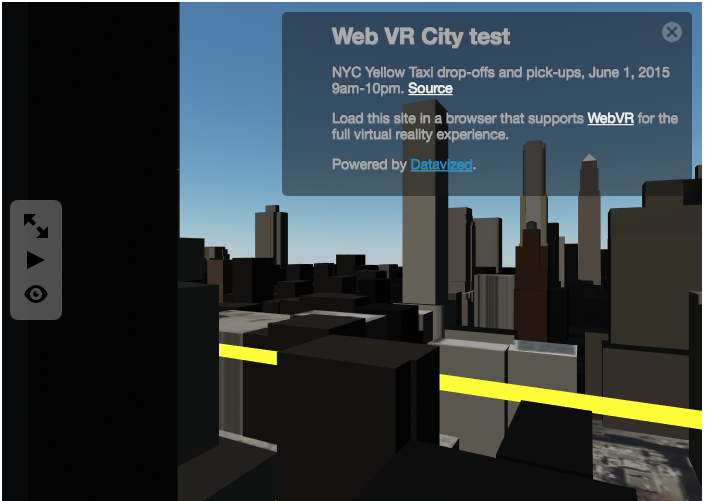

Okay, give me your pitch. What is Datavized?

Datavized is a New York-based WebVR software company. We’re building tools to enable 3D, interactive data visualization. We’re focusing on geospatial applications because we found, via prototyping, that bringing, say, a 3D bar graph into VR is not in itself compelling. This is a new medium, so we need to start from scratch.

The question we often get, even from VR enthusiasts, is, “Why VR?” An environment with street-level view in VR really makes sense. Whether you are using teleporting controllers or a browser in a 2D space, you can have full interactivity. What we’re building is hybrid software that enables the view of 3D interactive content on the web while also providing capabilities for an immersive experience. If the user has a headset, the immersive view is enabled.

We’re testing around this hypothesis: Is this technology suited for providing an objective view of data as well as a subjective experience? We believe it is. Data is meaningless without context. Without context, it’s just raw stuff. So we’re building tools that will enable new kinds of mapping.

We’re taking CSV spreadsheets and mapping data into these environments… We’re building 3D Earth, the ability to have country templates and city templates that you can search by zip code. We’re envisioning the start of a platform. We see WebVR as transformative. You don’t need headsets to access it. We’re looking to get tools into the hands of journalists.
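As a rough illustration of what "mapping CSV data into a 3D Earth" can involve, the sketch below reads rows with latitude, longitude, and a value, places each row on a unit sphere, and scales a bar height by the value. The column names (`name`, `lat`, `lon`, `value`), the function names, and the sample rows are all hypothetical, and positive values are assumed; this is not Datavized’s actual software or data format.

```python
import csv
import io
import math


def lat_lon_to_xyz(lat_deg, lon_deg, radius=1.0):
    """Convert latitude/longitude in degrees to a point on a sphere (y axis up)."""
    lat = math.radians(lat_deg)
    lon = math.radians(lon_deg)
    x = radius * math.cos(lat) * math.cos(lon)
    y = radius * math.sin(lat)
    z = radius * math.cos(lat) * math.sin(lon)
    return (x, y, z)


def rows_to_bars(csv_text, max_bar_height=0.2):
    """Turn CSV rows into bar descriptions: a base point on the globe plus a height."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    # Scale every bar relative to the largest value (assumed positive).
    peak = max(float(row["value"]) for row in rows)
    bars = []
    for row in rows:
        base = lat_lon_to_xyz(float(row["lat"]), float(row["lon"]))
        height = max_bar_height * float(row["value"]) / peak
        bars.append({"name": row["name"], "base": base, "height": height})
    return bars


# Placeholder rows invented for this example; not real data.
SAMPLE_CSV = """name,lat,lon,value
A,0,0,50
B,90,0,100
"""

bars = rows_to_bars(SAMPLE_CSV)
for bar in bars:
    print(bar["name"], bar["base"], round(bar["height"], 3))
```

A renderer would then draw each bar outward from its base point along the sphere’s surface normal. In a browser that rendering step would typically be handled by a 3D library, which is outside the scope of this sketch.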

So, for the uninitiated, “WebVR” means that I can view the work on my browser and interact with it?

Yes, you are viewing a web page. Because it’s VR you have a fully interactive 3D model. If you’re on your tablet you might be moving around. If you’re on your phone you might be in magic window mode. And if you’re using controllers you may be teleporting in that environment, as if walking through a web page.

What kind of stories can you tell within the platform that you’re building?

The stories span anywhere from climate change to housing to urban planning. We’re providing local context, with citizen journalism. We’re integrating a lot of open data as well, so we see these experiences as an extension of our digital lives. Texting a VR experience, messaging it, embedding it in a blog post…

We were talking before about how you’re kind of building a “data sandwich.” It has different levels, like Google Earth, where people can zoom into different levels of specificity. Could you talk about those levels?

We built a 3D Earth using NASA satellite imagery. We have a prototype where you can click on a country and view things like life expectancy or GDP. We’re doing something like this for the UN. You can view air pollution by country, and then dive into more detail in the data sandwich. You can go from satellite images to terrain data. As you zoom in, if you’ve been in Google Earth VR, it’s a similar context with a subjective view of terrain data and mapping political boundaries. When full-throttle in the data sandwich, you’re going into city-scale. With city views, you can fly over cities or zoom into that environment.

Part of what you’re tapping into is open-source data available online. Are you worried that those sources will dry up at some point?

We want to look at private databases, not just open data. We’re starting with the geospatial data but that can evolve. It will take experimentation, research and development. In terms of building the layers of earth and city, we need to customize the product. For open data we’re just at the tip of the iceberg in developing the product. We are talking with Google News Lab, Enigma and other partners. We really want to get these open data products to be useful for search. There’s a plethora of data. That’s not the problem. The challenge is giving it context.

Immerse is an initiative of Tribeca Film Institute, MIT Open DocLab and The Fledgling Fund. Learn more about our vision for the project here.