The Promise and Dread of Digitising Humans

In 2013, actor Paul Walker died in a car crash halfway through the filming of Furious 7. Universal was left with a tough decision: stop the production of a hugely profitable movie franchise, or find a way to carry on. Could they recreate Paul’s face digitally?

The studio approached Hao Li, a computer scientist who is currently a professor at the University of Southern California and CEO of Pinscreen, a startup focused on creating 3D avatars. He cut his teeth at Industrial Light and Magic devising new real-time facial capture approaches to CGI. Working with Hao and their in-house visual effects experts, the filmmakers were able to continue filming, using Walker’s brothers as stand-ins. Because the brothers were physically similar to him, the team could digitally recreate Paul’s face and seamlessly match it onto theirs in post-production.

How 'Furious 7' Brought the Late Paul Walker Back to Life. A version of this story first appeared in the Dec. 18 issue of The Hollywood Reporter magazine. To receive the… (www.hollywoodreporter.com)

It worked a treat, and the film went on to become the sixth highest-grossing film of all time.

Hao came out of that with a new Hollywood-focused objective that he wanted to explore in his research at USC: How do you speed up and scale the creation of digital humans?

His approach was to use deep learning, applied to computer graphics, using 3D scans and photographs as the training data. “One of the aims was: How can we make movies look better?” Hao says. “But as time progressed, it became clear that there would be more interesting applications in the emerging worlds of virtual and augmented reality, which need 3D models for immersive storytelling, communication, and social media.” Hao and his colleagues at Pinscreen started working on photoreal human beings and scaling these technologies to smartphones.

“Now we can do things automatically, using algorithms, that it used to take entire VFX [visual effects] departments to do. What in the past decade would have taken up too much computer processing, you can now do on your mobile phone, and development is happening at an exponential rate.”

As Facebook and the iPhone collected more data, artificial intelligence experts started to develop deep neural networks that learn to do complicated things, such as turning an old photograph of a person into a compelling 3D model. Given enough data (in this case a vast supply of photos), processing power and time, you can write a program, inspired by the way the human brain is structured, that will learn to accurately infer from a 2D picture what that face would look like from different angles, and then build that model.

I instinctively love the idea that we can create 3D versions of historical figures since it opens up a world of opportunity to engage audiences in history, science, and archaeology in new and exciting ways.

Some years ago there was a debate in the documentary industry about whether colourising black-and-white film was acceptable, since that was modifying the source and to do that would be to challenge the very nature of what constitutes a documentary. Increased ratings meant the colourisers won that argument. Nonetheless, it’s still controversial enough that Peter Jackson hit the headlines when he used Hollywood techniques developed for Lord of the Rings to create 2D and 3D colourised renditions of World War I from archive sources. He justified this approach in a January 18 interview on the BBC’s Today programme: “They didn’t fight the war in black and white…. It gives a true reflection of what the soldiers saw.”

Peter Jackson making World War One film. Director Peter Jackson is creating a new 3D film, using archive footage captured during World War One. The images are… (www.bbc.co.uk)

Who owns our depictions?

But if this gives us pause for thought, fully digitising humans places us at a more significant juncture. Once you scan something into a computer, it becomes a digital asset that you can manipulate in all sorts of ways. But what does it mean if that source is or was a human being?

I wanted to know what a documentary creator who has worked with digitised humans thought. Gabo Arora has worked with a Holocaust survivor in The Last Goodbye, where the need for authenticity is paramount, given the claim of certain fringe groups that the Holocaust never happened.

The Incredible, Urgent Power of Remembering the Holocaust in VR. Pinchas Gutter has returned to Majdanek at least a dozen times, but this trip is his final one to the onetime Nazi… (www.wired.com)

“When the second wave of VR first happened, and we started to make films for UNICEF using 360 video, you would hear people saying, ‘You can just put the camera there and show people what it’s like.’ But I never really believed that,” says Arora. “I do recognise that for future generations, there is a need for testimony to provide first-person accounts — I was there, that happened to me — and now we have the ability to take you there, to meet that person. I wanted to put this into practice with The Shoah Foundation, to make The Last Goodbye, to go back to a concentration camp with a Holocaust survivor who can tell us what happened to him and his family there. But would we make something that people might say: ‘You manipulated that?’ ”

The Last Goodbye is a room-scale VR documentary in which you visit the Majdanek concentration camp with Holocaust survivor Pinchas Gutter, who becomes your guide, telling you what happened to him and his family there. Pinchas is 3D, created using volumetric capture, a process for digitising humans using an array of cameras capturing his interview in high resolution and rendering him as a digital asset that can be imported into VR. You are able to visit areas of the camp with him via photogrammetry — thousands of photographs are taken of the camp and these are stitched together to create a photorealistic 3D environment that you can walk around in. Surely, documentary has never been so real.

“At first I suggested that we do the volumetric capture of Pinchas in Toronto, his home-town. But the Shoah Foundation said no. It was of extreme importance to them that it happened there at Majdanek and that this was verified and certified and kept on record, in order to remain as true-to-life as possible. But, nonetheless, we were making a documentary and with that goes some artifice: we painted out certain lights, museum signs and sounds; we colour-corrected to hide some of the ugliness of photogrammetry; we used fades and music,” Arora says.

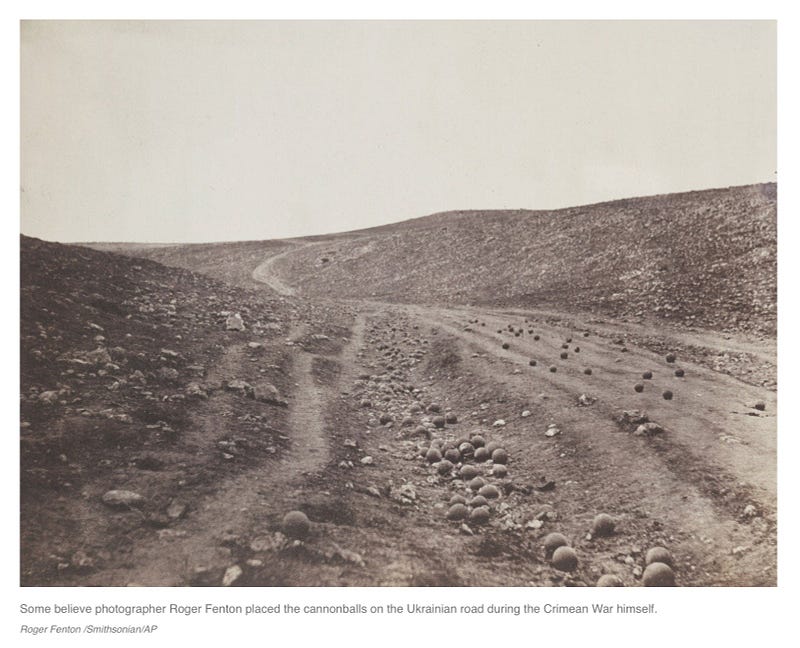

“Photos lied from the outset,” he observes. “Errol Morris explores this brilliantly.” In an interview on NPR’s All Things Considered, Morris reflects on two photos of the Crimean War taken by photojournalist Roger Fenton at the same spot. One had considerably more cannonballs in the road than the other.

Morris questions whether the original photo had been manipulated to seem more dramatic. “We all know that staging is that big no-no in photography. I would call it a fantasy that we can create some photographic truth by not moving anything, not touching anything, not interacting with the scene that we’re photographing in any way.”

Errol Morris Looks For Truth Outside Photographs. In his new book about photography, Believing Is Seeing, documentary filmmaker Errol Morris talks about what you don't… (www.npr.org)

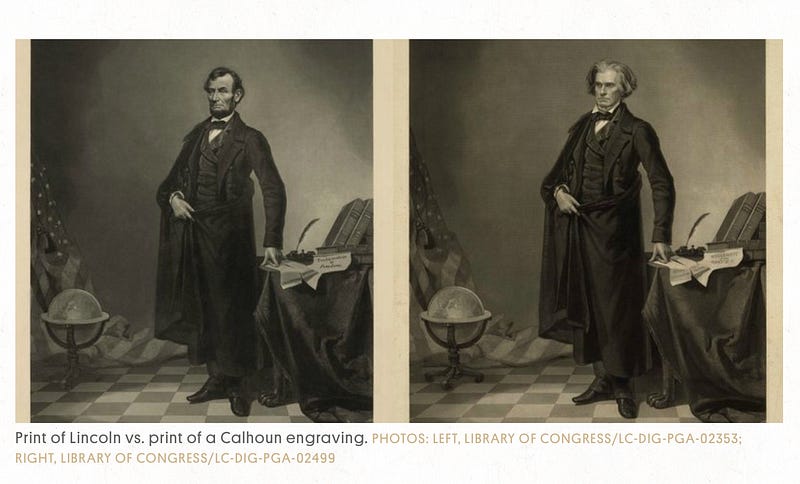

One hundred and fifty years after Abraham Lincoln sat for photographers as president of the United States, it was discovered that his head had been transposed onto another man’s body in his most famous portrait.

http://www.alteredimagesbdc.org/lincoln

“What we need most is critical thinking,” says Arora. “Susan Sontag said, ‘It’s passivity that dulls feeling.’ In immersive media we can create work that is anything but passive. Without imagination there is no empathy. What can we build with art and storytelling? The First World War was brought home through photography, the Vietnam War through TV, Syria through YouTube and Black Lives Matter through Facebook Live. Will the next conflict be covered in VR?”

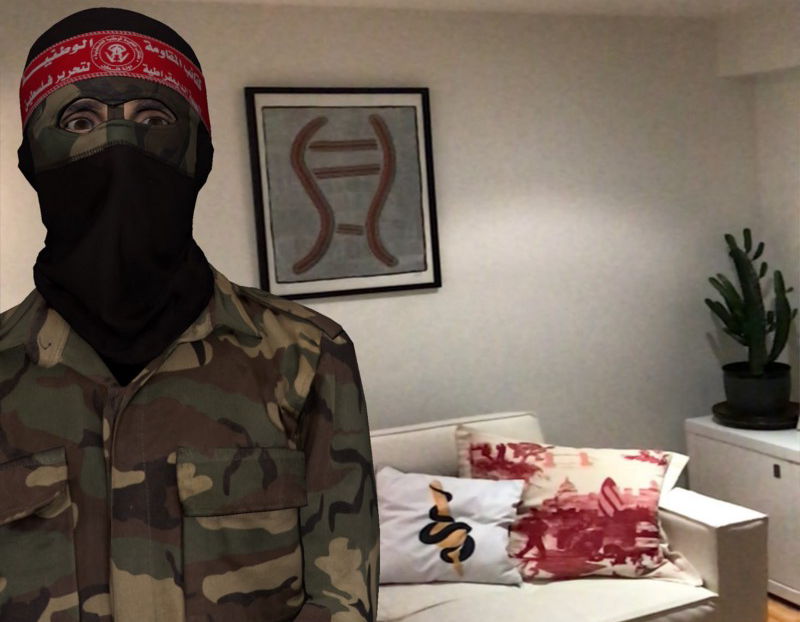

The Montreal agency Dpt. has also been bringing conflict home through digitising humans in their work on Karim Ben Khelifa’s The Enemy. They have created an augmented reality application, available free for iOS and Android, that allows me to introduce people from opposing sides of intractable conflicts into my living room. I fire it up and Gilad, a soldier from the Israel Defense Forces, is standing in my lounge. He is 3D, the same size as me, and seems to follow me with his eyes. As I walk towards him I hear him talking about who he thinks the enemy is. I walk around behind him and see Abu Khaled, a Palestinian fighter, on the other side of the room.

As I also hear from him I realise that they are both saying more or less the same things. “There is something very powerful about inviting these people into your personal space,” says Nicolas Roy, Creative Director of Dpt. “It’s literally bringing this conflict home to you and that was very important to us. There is something unique about AR in that it writes itself into your environment. Long after the experience you still remember these people as having inhabited that space — their presence lingers there. It is subject to abuse, though — you could place them somewhere completely inappropriate and take a photo of that. As far as I know, no one has done that. But there is nothing we could do to prevent it.”

New ethical quandaries

Meanwhile, Hao’s research has been coming on in leaps and bounds. He found out how to get the neural network to look at footage of people talking and use 3D tracking to understand their facial expressions. “When I map that onto my face I can drive their facial expressions. You composite it and I become them.”

He tells me about the voice-driven facial manipulation technology pioneered by Supasorn Suwajanakorn at the University of Washington. A neural network analyses hours of video footage of someone speaking (e.g. Obama) in order to match audio input with mouth-shape and texture, ultimately allowing you to literally put words into someone else’s mouth.

“You can go home and talk to your dead mother—because the technology exists for that,” Hao explains, leaving me feeling decidedly queasy. Is that honouring the dead? Right now I would instinctively say no — but, who knows, maybe in ten years that will be the new normal.

It occurs to me that this could be quite liberating in the use of on-air talent. A TV presenter can be scanned to become a digital asset, then you can use the same person in multiple languages. Actually you can use them an infinite number of times — you won’t need the actual person any more. We can write a tight contract, grab them while they are young, scan them once and have them for life — and longer.

More significantly, you put all of this together and you can make living and historical figures into puppets, making them say things they never said, and it would look real.

Hao recognises some of the ethical problems this raises. “The downside is that I can generate a photorealistic digital Trump, indistinguishable from the real thing, on my iPhone, and get him to declare nuclear war on North Korea.”

While this is still a little rough around the edges, the tech is developing at super-high speed — very soon it will look perfect.

“The thing is to get it out there and educate people — show them what’s possible — so that we can plan for it and create a safer environment,” says Hao. “Having a system and a framework will be the best way. Maybe we need to develop a blockchain mechanism for verification.”
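Hao does not spell out what such a mechanism would look like, so the following is only a minimal sketch of one ingredient a blockchain-style system might rely on: a hash-linked log of media fingerprints. Every name in it (`fingerprint`, `append_record`, `chain_is_intact`, `media_matches`) is hypothetical and invented for illustration. It is not a real blockchain, since it has no distributed consensus, and it cannot tell whether footage was faked before it was first registered.

```python
import hashlib
import json

GENESIS_HASH = "0" * 64  # placeholder "previous hash" for the first record


def fingerprint(media_bytes: bytes) -> str:
    """Return the SHA-256 hex digest of the raw media bytes."""
    return hashlib.sha256(media_bytes).hexdigest()


def _hash_body(body: dict) -> str:
    """Hash a record body using a stable JSON serialisation."""
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(encoded).hexdigest()


def append_record(chain: list, media_bytes: bytes, source: str) -> dict:
    """Append a record that covers the media fingerprint and the previous record."""
    prev_hash = chain[-1]["record_hash"] if chain else GENESIS_HASH
    body = {
        "source": source,  # hypothetical provenance label
        "media_hash": fingerprint(media_bytes),
        "prev_hash": prev_hash,
    }
    record = dict(body, record_hash=_hash_body(body))
    chain.append(record)
    return record


def chain_is_intact(chain: list) -> bool:
    """Check that no record has been altered or reordered since it was appended."""
    prev_hash = GENESIS_HASH
    for record in chain:
        body = {key: record[key] for key in ("source", "media_hash", "prev_hash")}
        if record["prev_hash"] != prev_hash:
            return False
        if record["record_hash"] != _hash_body(body):
            return False
        prev_hash = record["record_hash"]
    return True


def media_matches(chain: list, index: int, media_bytes: bytes) -> bool:
    """Check whether a file is byte-for-byte the one registered at `index`."""
    return chain[index]["media_hash"] == fingerprint(media_bytes)
```

In this sketch, a broadcaster would register footage at the moment of capture, and a viewer could later confirm that a circulating file is byte-for-byte the registered one. Any re-encoding changes the fingerprint, so a practical system would need something more forgiving than an exact hash.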

Nonny de la Peña is a journalist who has pioneered the use of digital humans in her genre-defining work. She sees the problem as wider and more fundamental: a breakdown of trust between the public and major institutions, including the press. “We need to rebuild trust to debunk false news. There have been institutional failings. It’s time to set a new global standard.” She has joined the Knight Foundation’s new commission on Trust, Media and Democracy to help define the future of journalism in the era of fakery.

Knight Foundation announces major Trust, Media and Democracy initiative to build a stronger future… MIAMI - Sept. 25, 2017 - The John S. and James L. Knight Foundation today announced a major initiative to support the… (knightfoundation.org)

This is a great start. We urgently need sophisticated tools to authenticate what is trustworthy. Currently the tech that allows fakery is ten steps ahead of any tech that can detect it. In the wrong hands things can get out of control very quickly. And we know from bitter experience that there are very sophisticated agents, from corporations to countries, looking for any means to manipulate us.

The opportunities opening up for documentary creators to use this technology are very exciting, and the step-change could be as big as the shift from radio to TV. But they steer us steadily into a less authentic universe and a world that we will struggle to recognise. Once a human has become a digital asset, you can easily manipulate them. We already think we have a problem with fake news. How will we contend with fake history?

We need a new definition of human value in media and a new definition of what a documentary is. We are being presented with one of the greatest challenges to journalism and documentary that we have yet faced: How can we redefine our craft when we can no longer tell truth from fiction?

Read the response

The Uncertain Nature of the Digital Truth by Carles Sora

Immerse is an initiative of Tribeca Film Institute, MIT Open DocLab and The Fledgling Fund.