An Immerse response

In Jessica Clark’s piece on recent developments in ‘haptic storytelling,’ she performs a valuable service by chronicling her experiences with a range of new devices used to add tactile sensations to virtual reality. Such accounts are especially important right now precisely because these experiences remain largely inaccessible to the bulk of VR users: devices like the HaptX glove and rigs like the one Clark tried out in the HERO installation are still very much in the demonstration stage — and we don’t yet know if they’ll ever make it much beyond that.

Even if they do, we don’t know if they’ll take the further step of migrating into the home. At the moment, the haptics industry (including Ultrahaptics touchless haptic feedback and the Feel Three VR motion simulator) seems to be trending away from domestication, that is, from bringing more robust haptics applications into the home, and toward location- or site-based encounters. The shift away from domestication sidesteps the ever-present challenge of scaling down complex interfaces for the consumer market: devices no longer have to cost $200 to $300 in order to have viable entertainment applications. It means, however, that such experiences will be confined to arcades, galleries, and other spaces of public entertainment.

This doesn’t necessarily have to be a problem. But if we’re thinking about touch’s centrality to haptic storytelling, it might be: right now, authoring content for VR continues to operate according to established visualist logics of storytelling, with touch sensations just sort of added onto existing audiovisual content. In spite of claims that getting haptics right is essential to the long-term success of virtual reality, VR remains largely dominated by appeals to the visual, to such an extent that Ken Hillis’s observation way back in 1999 still applies: “touch or tactility in a VE [virtual environment] remains a very visual tactility.” Touch feedback exists merely to offer confirmation of the image: “one orients oneself visually, and, as with sound, touch is made proof of what has first been seen.”

While VR seems to offer a sort of killer application for haptics, and haptics promises to provide a vital missing sensory dimension for VR, haptics remains additive rather than transformative. Haptic realism means simply, ‘Does the tactile sensation conform to the image depicted on the screen?’ We remain very far from the vision that Margaret Minsky offered back in 1995 of a “World Haptics Library” that would be used to inform the design of computerized touch sensations.

Margaret Minsky, “Computational haptics: the Sandpaper system for synthesizing texture for a force-feedback display” (1995)

Immersion Corporation, by adding vibration sensations to touchscreen videos, has creatively haptified some content (including Arby’s ads, a Homeland trailer, and a Pharrell video; download their Android app to get the feels), but we’re still very much in the logic of Aldous Huxley’s feelies and Salvador Dali’s Tactile Cinema (both imagined nearly a century ago), where touch content gets added onto cinematic images instead of upending or countering the logic of visual realism.

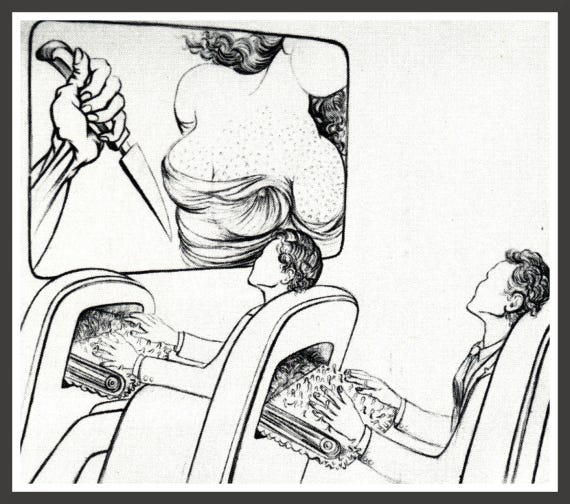

Salvador Dali, Le cinéma tactile (1930)

What would it take to flip the sensory script so that vision becomes the subordinate sense and touch takes its place as the dominant one? What new stories could we tell if we focused first on authoring content for touch, and then layered images and sounds on top of a haptic foundation? The stakes here are aesthetic, concerning the sensory qualities of virtual worlds and computer-generated environments. But they are also epistemic, as the rise of this new mode of haptic storytelling could disrupt established sensory hierarchies operating not just in virtual reality, but in media more generally. It is a question of how we use media to make sense of the world beyond our immediate physical surroundings, and of how we experience the things and people brought closer to us by being translated into electrical pulses communicated through digital networks.

To Touch Impossible Things

Clark’s account of her experience with HaptX’s VR glove, a wondrously complex device that uses microfluidics to simulate textures and temperature, highlights what this touch-centered content might look like. She recounts with delight feeling a deer prance around on her palm, before a virtual dragon appeared and “fried it to a crisp,” accompanied by an intense sensation of heat on her hand. This drive to use haptic interfaces to create new and unprecedented types of touch experience goes back to the earliest days of research into computer haptics, when researchers used touch feedback to represent microscale forces, like the pull of the bonds between molecules. A touch-centered haptic storytelling could start from the ground of these new types of tactile encounters and work up from there, with touch, rather than vision, serving as the anchor for content creation.

The HaptX developer kit debuted this week at the Future of Storytelling Summit in NYC

This circles us back to the question of domestication, which directly relates to the content problem. Popular press treatments of haptics since the 1990s have focused almost exclusively on the hardware side of the technology, as if haptics will instantly take off once someone invents the perfect device. It’s an understandable impulse: new haptics hardware holds a perpetually seductive and exciting promise. But if that hardware doesn’t drive the creation of compelling haptocentric content, its potential will remain suspended in an unrealized state.

Haptic storytelling — creating this compelling content — also involves providing people with the technical and conceptual tools to author meaningful content for touch. It entails demystifying haptic hardware — opening up the black box of haptics — to see how the systems controlling the production of artificial touch sensations operate. Like other forms of media literacy, haptic storytelling will require competence in both reading and writing for touch. And if more robust forms of haptic devices remain prohibitively expensive, with their use cases limited to site-based entertainment and industrial applications, then literacy in authoring content for touch will remain the province of engineers trained at elite institutions, working in corporate settings with substantial research and development budgets, able to afford costly license fees and development kits.

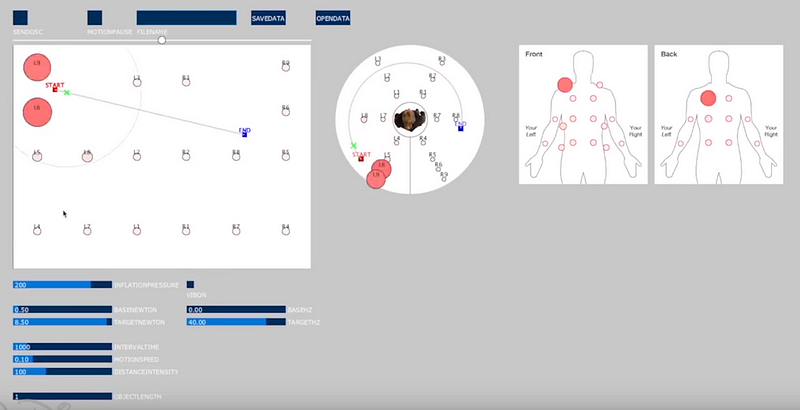

Haptic effects editor for Disney Research’s Force Jacket (2015)

There’s enough activity in the field right now to suggest that the future very well might be haptic. We might be headed toward an exciting and disruptive moment where these new touch technologies help undo vision’s purported dominance, rebalancing our sensory ecology in the process. There is no shortage of hardworking and creative people in the field of haptics right now, both on the industry side and on the university side, and the latest generation of VR has led to investment dollars being funneled into what had been a field characterized more often by commercial failure than success.

But even with the infusion of capital and talent into the industry, the proliferation of increasingly robust and immersive haptic devices is no sure thing. Two weeks ago, Road to VR reported that the company responsible for the Hardlight VR suit would be shutting down due to a lack of funding. The company cited slow growth in the VR industry more generally in explaining its struggles. As has often been the case in the history of technology, a good piece of hardware on its own isn’t enough without compelling use cases and a stable of appealing content to drive adoption. The future of haptic storytelling depends more on the stories we tell through touch than it does on the hardware we use to tell them.

David Parisi is an Associate Professor of Emerging Media at the College of Charleston. His book Archaeologies of Touch: Interfacing with Haptics from Electricity to Computing examines the origins, implications, and possible future trajectories of haptic human-computer interfaces.

Immerse is an initiative of the MIT Open DocLab and The Fledgling Fund, and it receives funding from Just Films | Ford Foundation and the MacArthur Foundation. IFP is our fiscal sponsor.