New Frontiers for Online Persuasion — and the Role of the Documentary Storyteller

By Ben Moskowitz

In a chapter written in early 2016 for i-Docs: The Evolving Practices of Interactive Documentary, I argued that the web’s greatest contribution to documentary will be the ability to “personalize” stories to individual viewers.

Events in the past year have demonstrated the power of this kind of data-driven persuasion, suggesting that storytellers working in the public interest will have no choice but to adopt it if they want to keep up.

The day after Donald Trump shocked the world with an unexpected victory in the US presidential election, a little-known company in London published a press release proclaiming: “We are thrilled that our revolutionary approach to data-driven communication has played such an integral part in President-elect Trump’s extraordinary win.”

The company was Cambridge Analytica, which had been quietly touting itself as a pioneer of “psychographic targeting.”

It’s an open question as to whether Cambridge Analytica — or the practice of psychographic targeting itself — ultimately made the difference in the outcome of the US election. That question is now occupying legions of politicos, data scientists and investigators. But the emergence of these practices in the world of political campaigns is noteworthy, and a bellwether for online persuasion in general.

So what is psychographic targeting anyway?

Political campaigns are increasingly indistinguishable from ad campaigns, and targeted online ad buys have been standard practice for many years. But they have historically been based on demographics (gender, race, education level, and so on). Platforms such as Facebook and DoubleClick enable ad buyers to target viewers based on these demographic characteristics. So, for instance, an 18-year-old woman will be served a different political ad than a 54-year-old man.

Now, companies such as Cambridge Analytica are emerging as middlemen to help clients target and tailor appeals to each individual, based on their actual psychological attributes. This is called “psychographic targeting.”

Cambridge Analytica uses a 30-year-old personality model called OCEAN (scoring across indicators of “openness, conscientiousness, extroversion, agreeableness, neuroticism”). It provides a fairly reliable predictor of a person’s motivations, needs, and fears — and, by extension, the kinds of advertisements and appeals that are most likely to be effective.

Psychographic targeting is more viable than ever, because OCEAN profiles can be statistically inferred from the publicly available data that people willingly give away on social networks — including posts and likes. For a political campaign, this creates interesting possibilities for persuading specific pockets of voters based on their specific personality traits.

In a story describing the Trump campaign’s online data operation, Forbes revealed that “on the day of the third presidential debate between Trump and Clinton, Trump’s team tested 175,000 different ad variations for his arguments. The messages differed for the most part only in microscopic details, in order to target the recipients in the optimal psychological way: different headings, colors, captions, with a photo or video.”

Vice’s Motherboard describes how Cambridge Analytica CEO Alexander Nix demonstrated the power of psychographic targeting to an audience in New York, using an example related to gun rights. For “a highly neurotic and conscientious audience,” the pitch is “the threat of a burglary” and “the insurance policy of a gun,” so such a person would be served an ad with “the hand of an intruder smashing a window.” Conversely, “people who care about tradition, and habits, and family” would be served an ad that “shows a man and a child standing in a field at sunset, both holding guns… shooting ducks.”
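The selection logic in Nix's example can be pictured as a simple rule over OCEAN scores. What follows is a minimal sketch in Python, not Cambridge Analytica's actual system: the 0-to-1 score scale, the `HIGH` and `LOW` thresholds, the `choose_gun_ad` function, the variant labels, and the idea that low openness stands in for caring about tradition are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OceanProfile:
    """Hypothetical OCEAN scores, each assumed to lie between 0.0 and 1.0."""
    openness: float
    conscientiousness: float
    extroversion: float
    agreeableness: float
    neuroticism: float


# Illustrative cut-offs for "high" and "low" traits; not taken from any real system.
HIGH = 0.7
LOW = 0.3


def choose_gun_ad(profile: OceanProfile) -> str:
    """Pick an ad variant label for the gun-rights example."""
    # Highly neurotic and conscientious viewers get the fear-based appeal.
    if profile.neuroticism >= HIGH and profile.conscientiousness >= HIGH:
        return "intruder_smashing_window"
    # Assumption: low openness is used as a proxy for valuing tradition and family.
    if profile.openness <= LOW:
        return "man_and_child_duck_hunting"
    # Everyone else falls back to a generic, untargeted ad.
    return "generic"


anxious = OceanProfile(0.5, 0.8, 0.4, 0.5, 0.9)
traditional = OceanProfile(0.2, 0.5, 0.5, 0.6, 0.3)
print(choose_gun_ad(anxious))
print(choose_gun_ad(traditional))
```

A real operation would presumably infer the scores statistically and test many more variants, but the basic shape is the same: a profile goes in, and a tailored appeal comes out.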

We are entering a strange new era of politics and algorithmic persuasion, made possible by the native business model of the internet.

From mass media to me media

More and more, the Cambridge Analyticas of the world are using commercial internet data to personalize appeals, shift opinions, and ultimately shape politics itself. The comparatively simple personalization of today — that is, the fact that you passively receive advertisements intended just for you — is becoming the foundation of a new approach to online communication. From the relatively innocuous targeted banner or pre-roll ad, we are moving into a world in which personalization is more magical and less detectable, thanks to developments in AI and computer graphics and advertisers’ ability to pay for eyeballs on demand.

Imagine a future, enabled by psychometrics and real-time compositing, where all media adapts itself to the specific viewer. If a BBC documentary on endangered species leaves a viewer insufficiently moved, perhaps the documentary should adapt its color grading and music until it finds the right emotional knob? If a war documentary is too brutalizing for a particular viewer, perhaps the documentary should read the viewer’s expression and skip the footage where the bomb goes off in the marketplace? (The BBC is in fact one among many organizations exploring how to enable this kind of personalization — its R&D unit is working on an initiative called Visual Perceptive Media, which aspires to “personalised video which responds to your personality and preferences.”)

Now think about this kind of technique applied in the realm of politics. The consequences of personalization could be quite dark if such techniques were to be applied ubiquitously or unethically.

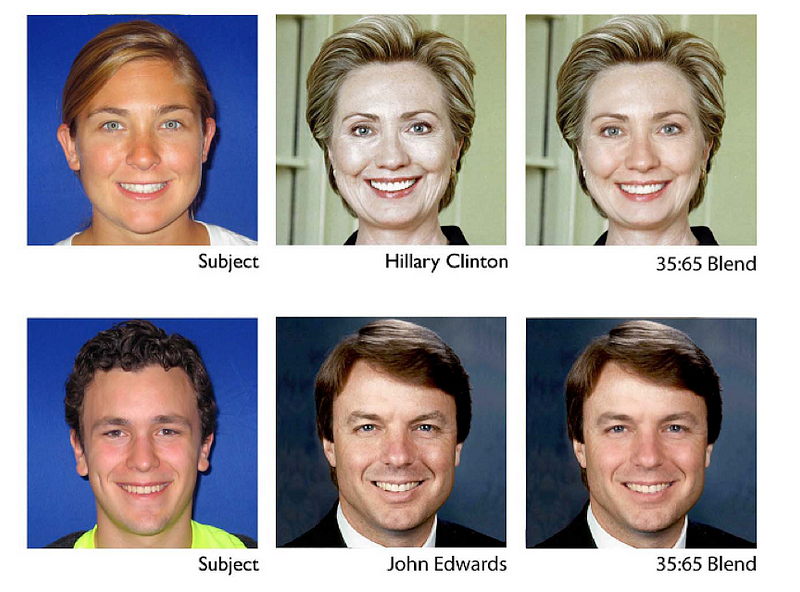

An experimental study in 2004 delivered tailored political ads to test subjects. In the ads served to the test group, presidential candidates’ faces were subtly blended with facial characteristics of the viewer. It turns out that if you show someone a political ad for George W. Bush, with their own facial characteristics blended in, they are more likely to want to vote for him (or any candidate, for that matter). Advertising that uses personalization for this kind of subliminal effect could be quietly deployed across social media in a not-too-distant future — and would not be too far removed from the data-driven persuasion and targeting techniques that prop up the web economy today. Just wait until these technologies collide with the fake news economy, where there is even less accountability.

These are important developments with important social ramifications. They must be communicated to the public and opened to public debate. But given the abstract nature of these problems, what are social storytellers to do? The only choice, arguably, is to adopt the same techniques as the persuaders: to use personalization as an explanatory device, and to use personalization to become more persuasive.

Baby steps

Why shouldn’t storytellers be using all the techniques that have made the rest of the web economy work, and applying them to documentary film online?

What would it mean to apply these personalization techniques to documentary? Might they allow interactive producers to make social issue storytelling more persuasive? We can consider a few common tropes that might emerge:

- Motion graphics — Producers might show viewers personalized statistics to put their own experience in broader context. A simple widget that reports on the minimum wage in the viewer’s state could be animated into a more filmic narrative about income inequality, which would presumably make for more relatable and engaging viewing.

- Scene selection — Producers might play with narrative structure by affecting scene order depending on who’s watching. In a documentary about steelworkers, imagine there’s a specific subject who deeply clicks with 15 percent of viewers and strikes them as a very sympathetic figure. But 85 percent of viewers can’t stand him, get distracted, and tune out. In a conventional documentary, there’s a hard creative choice to be made. Perhaps it’s possible to have it both ways?

- Voice-overs — Producers might lean on computers for help with narrative voice-overs. Dynamically generated scripts may be read by modern speech synthesis in lieu of people in a recording booth. Could computerized narrators customize the script and address viewers directly, increasing the emotional and persuasive power for each viewer? Alternatively, might producers automatically select from a number of pre-recorded voice-overs? What if the relevance of an online story could be broadened by recording multiple master narratives with diverse narrators, and selecting the narrator most appropriate for each viewing? Perhaps some viewers will respond better to an elderly Asian woman narrator, whereas others will stay spellbound by a 15-year-old narrator?
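To make the scene-selection idea concrete, here is a minimal sketch in Python of how a player might assemble a different cut for each viewer. It is an illustration under stated assumptions, not a description of any existing tool: the `Scene` type, the `assemble_cut` function, the scene titles, and the subjects "Frank" and "Maria" are all hypothetical, and the sketch simply assumes the player already knows which subjects a given viewer does not respond to.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Scene:
    title: str
    subject: str  # main on-screen subject of the scene
    alternate: Optional["Scene"] = None  # optional replacement covering the same story beat


def assemble_cut(master_cut, disliked_subjects):
    """Return the scene titles to play for one viewer, in order."""
    playlist = []
    for scene in master_cut:
        if scene.subject not in disliked_subjects:
            playlist.append(scene.title)
        elif scene.alternate is not None:
            # Swap in the alternate so the story beat is still covered.
            playlist.append(scene.alternate.title)
        # With no alternate available, the scene is skipped entirely.
    return playlist


# Hypothetical master cut for the steelworkers documentary.
master_cut = [
    Scene("Opening at the mill", "narrator"),
    Scene("Frank on the picket line", "Frank",
          alternate=Scene("Maria on the picket line", "Maria")),
    Scene("Frank at home", "Frank"),
    Scene("Closing", "narrator"),
]

print(assemble_cut(master_cut, disliked_subjects=set()))
print(assemble_cut(master_cut, disliked_subjects={"Frank"}))
```

The first call returns the full master cut. The second swaps or drops the scenes built around the subject that this viewer tunes out on. The same pattern could, in principle, choose among pre-recorded narrators instead of scenes.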

There are immense creative possibilities to be explored.

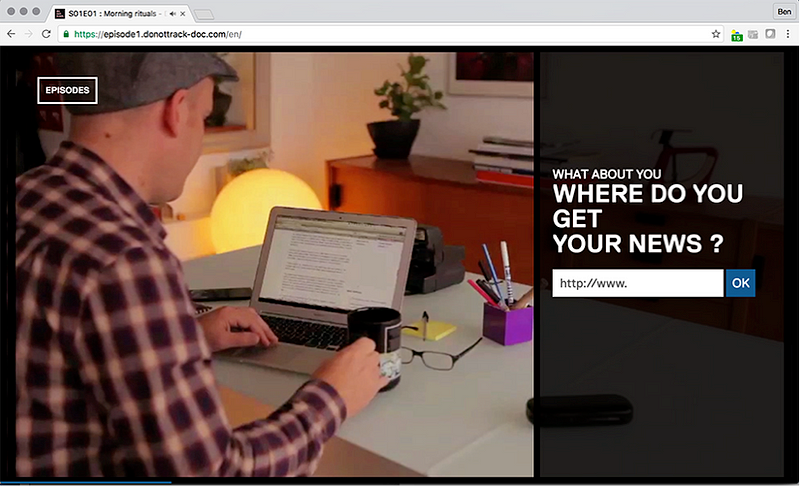

Some say that this production style is technically unfeasible and won’t work. If that’s you, then I’d suggest that you take a look at Blast Theory’s Karen app (2015), Sandra Gaudenzi’s Digital Me (2014), or Brett Gaylor’s Do Not Track series (2015). All of these lean on personalization to tell a more persuasive story.

Whither the author?

Others worry that personalized media requires the auteur to cede authorial control. These ideas are especially offensive to the conservative old guard of documentary filmmakers, who already regard the web with suspicion because it has thoroughly disrupted film finance and distribution. To these traditionalists, targeted and procedural media defeat the whole purpose of quality storytelling.

But it can be argued that procedural storytelling offers even greater authorial control, because it enables an evolution from “one-to-many storytelling” to “one-to-one storytelling with many people.” Personalization, in theory, offers even finer-grained control of the message and experience for an individual viewer.

Could computer-enhanced narratives ever truly feel as intimate as finely tuned works? Won’t these stories feel somehow disingenuous and hollow? This is a question that can only be answered through years of experimentation.

As the sophistication and seamlessness of personalized storytelling significantly improve in coming years, the ethics of personalization is something the field will need to seriously grapple with. Ubiquitous personalization represents a new frontier of propaganda and could be a threat to people’s cognitive security.

This is an issue that transcends consumer protection on the internet, and it is bigger than the present discourse around privacy and surveillance. What can be known about internet users is one thing; what can be done with this information to manipulate them is entirely another. Many interests, not just advertisers, will be using targeting and personalization to exploit people’s individual cognitive biases and weaknesses. This has significance for public opinion across nearly every social issue imaginable. This is the brave new data-driven world into which we are headed.

A large part of the responsibility to explore the social consequences of these changes will fall to journalists and documentary storytellers — and their stories will need to capture the attention and the imagination of the public at least as effectively as well-resourced commercial and government interests. It won’t be enough for documentary storytellers to keep an eye on the watchers. In a sense, they’ll need to create documentaries that watch their viewers.

Ben Moskowitz is an advocate for open standards, content and technology. Ben teaches interactive design and storytelling at the Interactive Telecommunications Program at New York University’s Tisch School of the Arts and is Visiting Associate Arts Professor at NYU Shanghai. As former Director of Development Strategy at the Mozilla Foundation, he has held senior roles leading the design, implementation and management of public interest media productions and video technologies. He is the founder of the Open Video Conference, a forum to coordinate free and open technologies, policies and practices in online video.

This piece appears in issue #10 of Immerse, which was curated by the editors of i-Docs: The Evolving Practices of Interactive Documentary (Columbia University Press, 2017), and was adapted in part from one of the book’s chapters.

Immerse is an initiative of Tribeca Film Institute, MIT Open DocLab and The Fledgling Fund.