Examining the use of voice models from “Val” to “The Andy Warhol Diaries”

Still image

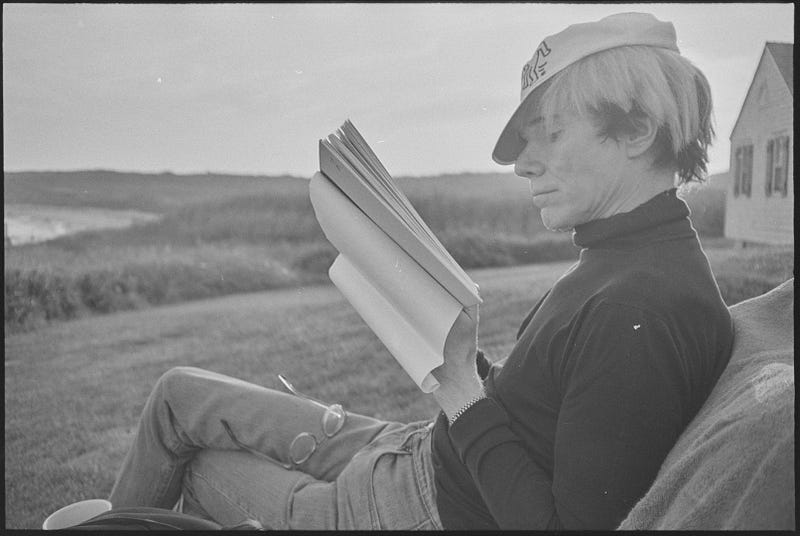

from The Andy Warhol Diaries

In the summer of 2021, mass audiences got an abrupt introduction to audio deepfaking thanks to the controversy over the documentary Roadrunner: A Film About Anthony Bourdain (2021). To narrate several lines from the late Anthony Bourdain's private writings, director Morgan Neville and his crew used AI software to generate a digital facsimile of Bourdain's voice. (The software has not been disclosed as of this writing.) Because the filmmakers did this without the permission of Bourdain's widow and without disclosing its use, Roadrunner sparked debate about the ethics and aesthetics of the technology. Such debates will only intensify as more media incorporating artificially generated audio is released. Several other recent documentary projects made similar use of synthetic voices; while they didn't raise as many eyebrows as Roadrunner, they deserve just as much scrutiny.

From actors to “voice models”

Val (2021) is a documentary about actor Val Kilmer, known for his roles in Top Gun, Batman Forever, Heat, and much more. Because Kilmer's voice had been drastically altered by a recent battle with throat cancer, the film relied on a traditional documentary workaround to voice his thoughts, using a soundalike to read his words as narration. For added poignancy, that soundalike was Kilmer's son, Jack, who sounds fairly close to the way his father did in his Top Gun days.

Not long after production wrapped, the crew reached out to British AI company Sonantic to create a “voice model” for Kilmer that would help him communicate going forward. The model is similar in principle to existing speech-generating devices, but rather than producing a robotic or generic voice from input text, it generates speech that sounds like Kilmer's. Kilmer has been dubbed by other actors in roles he has taken since his tracheotomy, but audiences will hear the model at work in Top Gun: Maverick (2022). This case reflects the technology's more optimistic potential: a natural evolution of the speech generators disabled people have used for decades, enabling communication in voices that better reflect their users.

In most cases, however, the use of audio deepfaking in film receives mixed-to-negative responses. The highest-profile example yet came with the Disney+ Star Wars series The Mandalorian (2019) and The Book of Boba Fett (2021). Luke Skywalker, originally portrayed by Mark Hamill in the films, appears entirely through a mixture of computer-generation tools: a de-aged rendering of Hamill's face is placed on the body of a double, while Luke's voice is synthesized with AI software. That audio was created by Respeecher, which has also recently been used to resurrect the likes of Vince Lombardi and Manuel Rivera Morales for sports promotions. Many commentators reacted poorly to the deepfaked Luke, with Boing Boing pointing out that choosing an algorithm over Hamill's own voice is especially odd, given that he is a prolific voice actor (and, of course, still alive).

It's fascinating that Respeecher's catalog of success stories places Luke Skywalker alongside deceased sports figures, whose appearances in commercials recall the recurring advertising trend of CG recreations of celebrities like Fred Astaire and Audrey Hepburn. AI techniques seem to have brought this fad back into vogue. Since our culture trades heavily in iconic figures and nostalgia, it makes sense that so many resources are invested in imitating these icons ever more convincingly. (And in Disney's case, since the icons are lucrative intellectual property, it's in the company's interest to find ways to preserve them past the deaths of their original portrayers.)

Case study: The Andy Warhol Diaries

This March saw the release of The Andy Warhol Diaries (2022), a Netflix docuseries narrated by a Warhol voice generated by Resemble AI, which reads excerpts from the pop artist's voluminous diaries. (The production received the Warhol estate's permission to use the technology, as multiple disclaimers inform the viewer.) In press notes, director Andrew Rossi explains that he wanted to “communicate that experience of having such an intimate connection to Andy, without having an actor in the middle mediating that relationship.” He also sees the choice as in keeping with Warhol's philosophy, citing the artist's expressed desire to “be a machine” and his lifelong interest in creating avatars and alternate personae in his work.

We can't know for sure what Warhol would think of the series' “AI Andy,” but Rossi's justification is interesting for a different reason: its implicit claim that this use of AI somehow does not mediate the relationship between Warhol and the audience. In a statement to Immerse, Resemble AI founder and CEO Zohaib Ahmed said, “The creative team wanted to add as much authenticity to the docuseries [as possible], and thought that an AI representation of Andy Warhol would create a compelling and immersive experience.” The assumption is that viewers will accept this voice more readily than a soundalike or some other stand-in, the same logic that leads to creating an all-AI Luke Skywalker rather than simply recasting the role.

Yet the lengthy process behind AI Andy is, if anything, more mediated. It involved a great deal of tinkering with the algorithms, tones and pitch, and innumerable other tiny details, all built on a mere three minutes' worth of usable audio. It also involved a performer: character actor Bill Irwin read Warhol's lines as a reference for the technicians. In an email exchange with Immerse, a press representative confirmed that Irwin read “all the lines in Andrew [Rossi]'s script.” The result, then, is something like an audio version of an Andy Serkis motion-capture performance, with the digital output heavily shaped by human intervention.

And even with the nuances added by the sound engineers and Irwin's guidance, AI Andy has the same problem as the digital Skywalker and many other deepfaked voices. It echoes from the uncanny valley, full of flat pronunciations and odd waverings that undermine the attempt at realism. Futurist boosters forecast that these kinks will soon be ironed out, but the fact that Resemble AI needed to bring in Irwin suggests that generating convincing voices may be harder than they claim. It also makes one wonder how much of deepfaking is a Potemkin village, an impressive facade propped up by hidden human labor. This is difficult to gauge, of course, since much of the process remains opaque to outsiders.

Ahmed told Immerse that some of the most common reasons customers bought his company's service were “Saving voice actors the time and expense of traveling to studios,” “Help[ing] customer service teams … address the need for language translation quickly and cost-effectively,” and “Keeping up with new gaming releases, so the audio stays relevant.” Despite the artistic possibilities, it seems that, as usual, industry demand for shortcuts is doing more than anything else to drive this technology's development. For documentary filmmakers, that leaves much more experimentation and discovery to be done.


Immerse is an initiative of the MIT Open DocLab and Dot Connector Studio, and receives funding from Just Films | Ford Foundation, the MacArthur Foundation, and the National Endowment for the Arts. The Gotham Film & Media Institute is our fiscal sponsor. We are committed to exploring and showcasing emerging nonfiction projects that push the boundaries of media and tackle issues of social justice, and rely on friends like you to sustain ourselves and grow.