What Can the First Years of VR Nonfiction Tell Us About Its Future?

by Chris Bevan

A word cloud generated from the titles of 530 VR nonfiction pieces released 2012–2018

VR Nonfiction — A Mediography is an interactive database of virtual reality nonfiction content covering the period 2012–2018. Our database charts the emergence of VR nonfiction on a timeline, placing the release of content within the wider context of international film festivals and key milestones of VR technology development. In this article, we describe the process of identifying and cataloguing this content before highlighting some key findings from the first six years of VR nonfiction.

Since 2012, producers of VR nonfiction have created diverse works encompassing a variety of forms, from linear 360° video to interactive room-scale CGI experiences. Other works have gone further still, mixing elaborate real-world sets, human actors, and multi-sensory apparatuses to create installation-based pieces that blur the boundaries between cinema, games, and immersive theatre.

Over the last 24 months, the Virtual Realities: Immersive Documentary Encounters project has been systematically identifying and collating VR nonfiction content to create a mediography: an online interactive timeline charting the history of work in the medium.

Beginning with Nonny de la Peña’s groundbreaking 2012 piece “Hunger in LA,” our collection now numbers over 530 titles. With the ultimate aim of creating a comprehensive record of titles in English, we have cast our net wide — scouring the programmes of over 70 international film and documentary festivals alongside extensive organic search, social media, and the catalogues of a range of content aggregators including WITHIN, Jaunt, and The New York Times.

The metadata we are collecting is also providing us with new insights into how producers are exploiting the affordances of the medium. In 2019, we presented the first research output from the mediography project in Glasgow at the International Conference on Human Factors in Computing Systems, the leading conference on Human-Computer Interaction. In our paper Behind the Curtain of the “Ultimate Empathy Machine”: On the Composition of Virtual Reality Nonfiction Experiences, we examined 150 VR nonfiction titles in depth, identifying 64 characteristics of the medium including aspects of visual/audio composition, viewer role, and interaction.

VR nonfiction content typically takes one of two forms: live-action 360° video, and computer-generated (CGI) pieces that are usually created using a game development engine such as Unity. Although there are increasing levels of overlap, the differences in skill-set, personnel, and workflow across these two production methods remain considerable. At least for the time being, it is useful to consider them separately.

Our deep-dive analysis has also revealed key differences between these two forms. For instance, we found that 360° video-based content tends to assign the viewer the role of passive observer, more often than not adopting an objective omniscient viewpoint. This appears contrary to its early promise to allow viewers to “stand in the shoes of others.” On the other hand, CGI-based VR nonfiction, while exploring a broader range of viewpoints and viewer roles, lacked interactivity to a level that surprised us: only 8% of CGI-based titles provided for active participatory involvement in the story beyond movement of the head.

How has VR nonfiction developed so far, and where is it heading?

Our dataset of 530+ titles reveals that 70% (380 titles) of VR nonfiction content released between 2012 and the end of 2018 was entirely or predominantly linear 360° video (i.e. non-interactive beyond head tracking, with small amounts of CGI content in cutscenes and titling accepted). The remaining 30% (160 titles) consisted of CGI-based titles with varying degrees of interactivity.

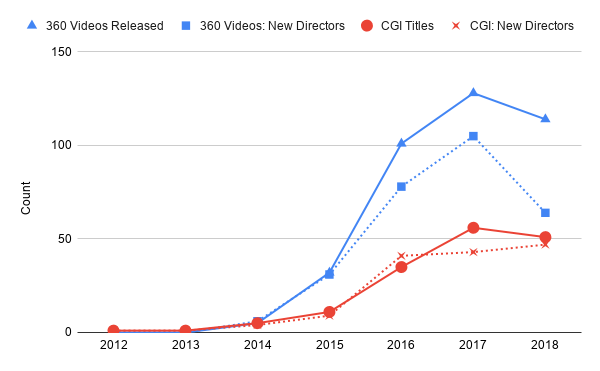

To understand how these two types of content have developed over time, in fig. 1 below we show the number of releases for each type of content over the 2012–2018 period against the number of new content directors entering the market.

Fig. 1: New title and new entrant director counts for 360° video and CGI-based VR nonfiction, 2012–2018.

We draw attention to two points of interest in this data. The first is that, following a sustained period of rapid year-on-year growth, 2018 saw the first signs of a downturn in raw content numbers and new-entrant directors for both types of media. While we must stress that our content collection for 2018 is not yet as thorough as it is for previous years, we also note that progressively fewer new titles are emerging from our recent searches. We therefore anticipate the final picture to indicate a levelling-off for CGI and a slight decline in 360° video.

The second point of interest is that, following a spike in new directors of 360° video content entering the market in 2016, this number dropped sharply in 2018. Across the full dataset, we have identified 430 unique directors of VR nonfiction content. However, looking at their output over time, we see that the vast majority of these directors (81%) have released only one piece of work. Despite the rapid influx of new content in the first six years, it is striking that the number of VR nonfiction directors who have gone on to direct three or more pieces is just 38 (9%).
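For readers who want to reproduce this kind of tally on their own catalogue, the following is a minimal sketch of how per-director output could be counted. It is not the code behind the mediography: the function name, the `(director, title)` record layout, and the toy data are all assumptions made for illustration.

```python
from collections import Counter

def director_output_shares(credits):
    """Summarise how many titles each director has released.

    `credits` is an iterable of (director, title) pairs; this record
    layout is a hypothetical one, not the mediography's actual schema.
    Returns (number of directors, share with exactly one title,
    number with three or more titles).
    """
    titles_by_director = {}
    for director, title in credits:
        # A set ensures a title credited twice is only counted once.
        titles_by_director.setdefault(director, set()).add(title)

    total = len(titles_by_director)
    if total == 0:
        return 0, 0.0, 0

    # Map "number of titles" -> "number of directors with that many".
    output_sizes = Counter(len(titles) for titles in titles_by_director.values())
    single = output_sizes[1]
    three_plus = sum(n for size, n in output_sizes.items() if size >= 3)
    return total, single / total, three_plus

# Toy data for illustration only; these are not real directors or titles.
toy_credits = [
    ("Director A", "Title 1"),
    ("Director B", "Title 2"),
    ("Director B", "Title 3"),
    ("Director C", "Title 4"),
    ("Director C", "Title 5"),
    ("Director C", "Title 6"),
    ("Director D", "Title 7"),
]
total, share_single, three_plus = director_output_shares(toy_credits)
```

Applied to the figures reported above, the same arithmetic gives 38 of 430 directors, or roughly 9%, with three or more pieces.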

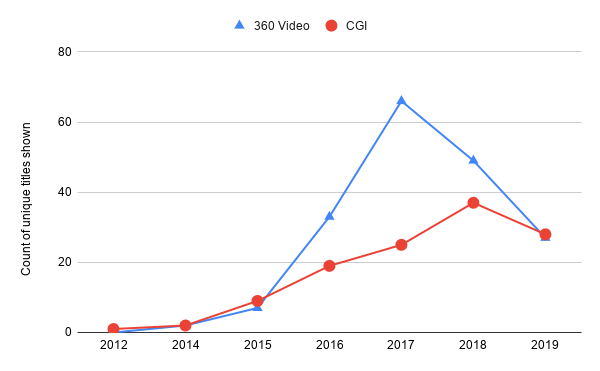

A similar picture emerges when we examine VR nonfiction in our film festival data. In fig. 2 below, we plot the number of VR nonfiction titles that were released for each year and shown at international film festivals, again splitting across linear 360° video and CGI-based works (titles that are shown at multiple festivals in the same year are only counted once). In this case, we do have extensive data for 2018 and have also included data for 2019 based on the programmes of 10 leading international festivals for VR nonfiction content in that year.

Fig. 2: VR nonfiction titles shown at international film festivals, 2012–2018. A full listing of the festivals covered up to 2018 can be seen at http://vrdocumentaryencounters.co.uk/vrmediography/festivallist/
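The counting rule used for fig. 2, in which a title shown at several festivals in the same year is counted only once, can be sketched as follows. This is an illustrative sketch rather than our actual pipeline: the function name, the `(title, year, form, festival)` record layout, and the toy screenings are assumptions.

```python
from collections import defaultdict

def count_titles_per_year(screenings):
    """Count festival titles per (year, form), once per title per year.

    `screenings` is an iterable of (title, year, form, festival) tuples;
    this layout is hypothetical and chosen only for the example.
    """
    seen = set()
    counts = defaultdict(int)
    for title, year, form, _festival in screenings:
        key = (title, year)
        if key in seen:
            # Same title at another festival in the same year: skip it.
            continue
        seen.add(key)
        counts[(year, form)] += 1
    return dict(counts)

# Toy data for illustration only; not taken from the mediography.
toy_screenings = [
    ("Title A", 2017, "360 video", "Festival X"),
    ("Title A", 2017, "360 video", "Festival Y"),  # duplicate showing
    ("Title B", 2017, "CGI", "Festival X"),
    ("Title C", 2018, "360 video", "Festival Z"),
]
per_year = count_titles_per_year(toy_screenings)
```

Keying on the title and year, rather than on the festival, is what prevents a widely toured piece from inflating the count for its year.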

Here we can again see the spike in 360° video content on the 2017 film festival circuit. In this case, however, the spike is even more pronounced, and is followed by a similarly dramatic cooling in 2018/19. For the first time since 2015, 2019’s festival output appears to be fairly evenly split across the two forms.

To understand what went on at the film festivals of 2017, and what this might mean for the future of these two forms of VR nonfiction, we can look to several key events from the preceding two years that the mediography reveals. The first is that, since the premiere of “Hunger in LA” at Sundance 2012 (widely accepted as the first showing of a VR nonfiction piece at an international film festival), multiple international film festivals had been quietly starting to pick up VR nonfiction content on their programmes. By this point, new CGI-based content was slowly beginning to emerge from creatives working with the “developer kit” model of the Oculus Rift and also from others experimenting with 360° video-based experiences. In 2015, Sundance showed six pieces of VR nonfiction. Two appeared at Tribeca, three at Sheffield Doc/Fest, and seven were shown at IDFA.

A second key event in 2015 was the release of Clouds Over Sidra. This nine-minute 360° video piece, a story of displacement in the then-raging civil war in Syria, went on to generate considerable international press attention, with particular focus given to how VR seemed able to create a heightened sense of empathy between audience and subject. Chris Milk, the creator of Clouds Over Sidra, would later describe VR as the “ultimate machine for empathy,” a claim challenged in the first installment of our Immerse article series by my colleague Harry Farmer.

Throughout 2015, interest in VR quickly spread among the filmmaking community. Creatives who wished to explore 360° video production were further supported by the arrival of specialist 360° cameras such as Nokia’s “OZO,” significantly reducing technical barriers and costs to entry. Release numbers for VR nonfiction titles rapidly increased, jumping from just nine in 2014 to 43 in 2015, with the number of new content directors also increasing by 300% from 10 to 40. By November of that year, The New York Times had released its own VR nonfiction content platform NYT VR, supplying free Google Cardboard headsets to over one million of its subscribers.

In 2016, following TechCrunch’s prediction that 2016 would be the year of VR, the consumer model of the Oculus Rift launched, with HTC releasing its room-scale Vive platform one month later. In May, Oculus responded directly to the growth in VR nonfiction content by launching VR for Good, a programme providing funds and mentorship to content creators interested in exploring the pro-social potential of VR. By the end of the year, the release of VR nonfiction titles had tripled, from 43 to 136, an expansion particularly marked in the number of 360° video-based releases. New directors entering the scene also tripled, from 40 to 119.

By 2017, then, all of the stars were aligned. In that year, we saw VR nonfiction content production reach a peak, with multiple film festivals showing record numbers of VR nonfiction titles. However, when we come to examine the type of content being shown, it is clear that there was a heavy emphasis on 360° video over CGI. This distinction is important. While the overall number of 360° video-based titles has only declined slightly in the years since 2017, the number of these titles being picked up and shown at film festivals appears to have dropped dramatically.

So what does this tell us about the future of VR nonfiction?

A straightforward conclusion to draw is that 360° video-based VR nonfiction has passed through a bubble of rapid early-adopter interest and is now beginning a period of rationalisation. CGI-based nonfiction content, on the other hand, has displayed a slower but fairly consistent growth, and — if the healthy and diverse showing at this year’s Venice Biennale is any indicator — may well be the main source of growth for the medium over the next few years.

However, as Ingrid Kopp has discussed in a previous issue of Immerse, the way in which VR nonfiction is distributed and exhibited in the coming years will be key to what happens next for both forms. While VR content now appears to be well established at film festivals, we are now beginning to see more augmented and mixed reality-based nonfiction content vying for audience attention — often sharing the same programmes.

Further, the immediate future of the in-home VR nonfiction market remains far from clear. It is notable, for example, that several key players from 2014–2018 have now wound down or pivoted their attention to other forms of XR. Jaunt, one of the earliest adopters of 360° video, switched its attention to augmented reality-based content in October 2018, and in the UK, early adopters such as the BBC have now wound down their VR production.

This may seem rather downbeat, but we must be mindful that such activity is not entirely unexpected; early enthusiasm for any new medium tends to give ground as the longer-term underlying challenges become more salient.

Whether VR nonfiction surpasses the heady heights of 2017 remains to be seen, yet the rapid pace of innovation in VR, particularly in live-scene volumetric capture, gives ample reason to think that the best of the medium remains ahead of it, not behind. Nonny de la Peña’s innovative Reach platform, for example, promises CGI-based content generation and distribution in a single centralised package that will hopefully reduce many of the barriers to entry that have dogged the CGI side of VR in its early years. Indeed, who would be better placed to guide the next stage of VR nonfiction development than its pioneer?

What’s next for the Mediography project?

As an ongoing piece of work, we want the VR Mediography project to be a useful tool for both researchers and practitioners in VR nonfiction. In the short term, we will be working to complete our collection, and we encourage readers to contact us about any content we might have missed. Similarly, we are continually working on expanding the search capabilities of the database and welcome suggestions at: mediography@vrdocumentaryencounters.co.uk.

This article is part of Virtual Realities, an Immerse series by researchers on the UK EPSRC-funded Virtual Realities: Immersive Documentary Encounters project. This project is employing an interdisciplinary approach to examining the production and user experience of nonfiction virtual reality content. The project team is led by Professor Ki Cater (University of Bristol), Professor Danaë Stanton Fraser (University of Bath) and Professor Mandy Rose (University of the West of England), with researchers Dr. Chris Bevan, Dr. Harry Farmer and Dr. David Green, and administrative support from Jo Gildersleve. For more information see http://vrdocumentaryencounters.co.uk.

Immerse is an initiative of the MIT Open DocLab and The Fledgling Fund, and it receives funding from Just Films | Ford Foundation and the MacArthur Foundation. IFP is our fiscal sponsor.