Artificial intelligence marks a profound shift in how we understand ourselves. Algorithms generate new maps for how we make sense of the world. We are reproducing ourselves through technology, creating tools that reflect our own image and augmenting our realities through big data software, global interconnectivity, and global consciousness. We have handed our cognitive biases, values, and misunderstandings over to math-powered applications that increasingly manage our lives. This changes our stories.

Machine learning, a core branch of artificial intelligence, is a field driven by data, and data, by its nature, is based on the past and on the assumption that patterns will repeat. It depends on the future resembling the past. The data we generate and collect shape a machine's cultural context: how it processes the world around us and how it builds its memory structure. A machine is fed human preferences, choices, and behaviors, and it repeats them based on the data amassed. On a larger scale, the machine learns from systemic paradigms and prejudices, ensuring that the same voices will be heard to the exclusion of others.
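To make the "repeats the past" point concrete, here is a deliberately tiny sketch. It is not how production systems work. It assumes the simplest possible learner, a majority-class baseline that only remembers how often each outcome appeared in hypothetical historical data. The function names `fit_majority` and `predict` and the data are illustrative inventions.

```python
from collections import Counter


def fit_majority(history):
    """'Train' the simplest possible model: count how often each
    outcome appeared in past (hypothetical) data."""
    return Counter(history)


def predict(model):
    """Predict whichever outcome was most common in the past."""
    outcome, _count = model.most_common(1)[0]
    return outcome


# Hypothetical historical data, skewed toward one option.
history = ["A"] * 7 + ["B"] * 3
model = fit_majority(history)

# Every future prediction repeats the dominant past pattern;
# the minority outcome "B" is never predicted, however often we ask.
predictions = [predict(model) for _ in range(5)]
print(predictions)  # ['A', 'A', 'A', 'A', 'A']
```

Real models are far more elaborate. They still share this basic dependence on the distribution of the data they were trained on.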

Even with the best intentions, applications made by fallible humans are driven by the data economy. The way we currently run businesses and organizations has been stretched to the limit. We are always yearning for more: faster and more centralized production, greater accuracy, and "out of the box" solutions. Western culture contains a strain of hyper-paranoia, and this cultural context is being built into intelligent machines. The machines' learning models and their decision-making processes can be considered the genetics of our technology.

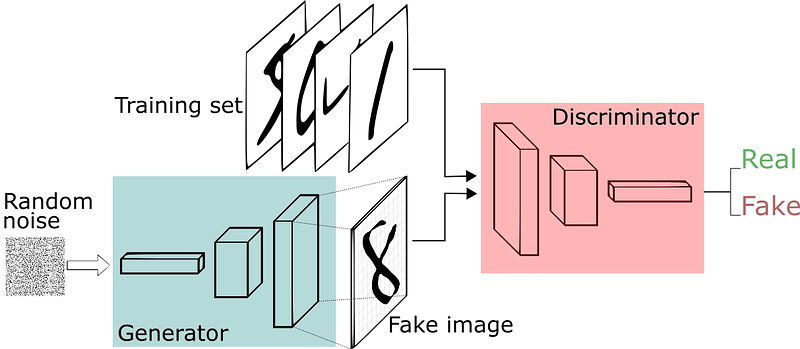

Take generative adversarial networks (GANs) as an example. One model, the generator, is trained to generate data. The other, the discriminator, tries to classify whether an input image is real or generated. The two networks are locked in a schizophrenic battle: one attempts to separate real images from fakes, while the other tries to trick it into accepting its fakes as real. The resulting images can be nearly impossible to tell apart from real ones.

A sketch of a general GAN model by Thalles Silva

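The tug-of-war between the two GAN networks can be expressed through their opposing loss functions. Below is a minimal sketch in plain Python, assuming the standard losses from the original GAN formulation (Goodfellow et al., 2014). The discriminator uses binary cross-entropy. The generator uses the common "non-saturating" variant. The functions take the discriminator's output probabilities directly. The probability values in the example are hypothetical, and no actual networks are trained here.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Binary cross-entropy for the discriminator: it wants D(x) -> 1
    on real samples and D(G(z)) -> 0 on generated samples.
    Inputs are discriminator output probabilities in (0, 1)."""
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating generator loss: the generator wants D(G(z)) -> 1,
    i.e. it wants the discriminator to call its fakes real."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# Hypothetical discriminator outputs on two real and two generated images.
d_real = [0.9, 0.8]

# Case 1: the discriminator sees through the fakes.
caught = [0.1, 0.2]
print("caught: D loss", round(discriminator_loss(d_real, caught), 3),
      "G loss", round(generator_loss(caught), 3))

# Case 2: the generator fools the discriminator.
fooled = [0.9, 0.8]
print("fooled: D loss", round(discriminator_loss(d_real, fooled), 3),
      "G loss", round(generator_loss(fooled), 3))
```

The losses move in opposite directions. When the discriminator catches the fakes, its own loss is low and the generator's is high. When it is fooled, the situation reverses. Each network's improvement is the other's setback, which is the "battle" described above.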

Or consider reinforcement learning, a popular machine learning method often trained and tested in video games. There we reward hyper-accuracy, along with actions that we would not reward in real life.

We are quickly traveling into the age of over-complex artificial intelligence and machine learning, powered by abstract mathematical structures that work behind the scenes, invisible to the public. These multi-level models are obscure and opaque. We ask our machines to "observe" the data rather than compute over it by hand, to examine volumes of images and paragraphs in multiple languages, and to draw their own conclusions. Inner elements interconnect, grow, and change with no supervision, sometimes without our understanding how they change or what they affect over space and time. This undermines the very concept of the artificial, because AI processes become more natural and organic. Invisible layers form dysfunctional, unexplained, and unpredictable states: dark states. When this happens in the human mind, we call it a mental disorder. Can we explore dark states as a representation of the machine's entity?

After all, like the mind, technology is an outcome of our society and not the result of an innocent process. Mental disorders have existed since the beginning of recorded history. Therefore, we should assume that they are ubiquitous and an inseparable feature of intelligence in all its forms. Dysfunction is inherent in every complex system, organic or digital. It is part of the system's construction. It cannot be considered a bug or a glitch; it is a multilayered mess that leads to unexpected behaviors, for better or worse.

I believe that if machines have mental capacities, they can also have the capacity for mental illness. Here are 5 reasons why, which I outlined for the Becoming Human blog:

5 reasons why I believe AI can have mental illness (becominghuman.ai)

Surprising and unpredictable code segments should be embraced as particularly informative clues about the nature and consequences of the philosophical tensions that generate them: technical problems are philosophical problems. We need to act with humility in the face of systems that are so difficult to design, and to become comfortable muddling through. We can start by defining, or at least acknowledging, what we don't know.

Broadening the conversation

In a time of technological advancement, we rarely create space for deliberation without the ultimate goal of making something that serves human needs faster and for profit. We need to take time to think outside the pipeline and consider the philosophical and emotional implications of rapidly advancing technology. We also need to bring together minds from outside the tech industry who can introduce new approaches and premises.

Along with my producing partner Emma Dessau, I want to create a space for consideration and experimentation. We aim to generate ideas that are accessible to the public, widen the conversation, and bring new voices into the development of AI. The group will be housed at the MIT Open Documentary Lab (ODL), in collaboration with its director, Sarah Wolozin. We will host meetings to foster dialogue, collaboration, and research for media-makers, artists, journalists, researchers, and anyone with a shared interest.

If we want to travel further into the era of cognitive machines, we will need to let machines explore on their own and do weird things, rather than merely act in accordance with our wants and desires. It is our responsibility to recognize that intelligent systems are evolving in ways we don't always understand. As the Danish author Tor Nørretranders writes, "We think about machines as rational, cold-blooded, and selfish. Therefore we treat them as such." But what if we engaged with a different narrative?

If you are interested in hearing more about our group, contact us at MSAIresearch@gmail.com.

This post was written together with Emma Dessau ❤

Immerse is an initiative of Tribeca Film Institute, MIT Open DocLab and The Fledgling Fund. Learn more about our vision for the project here.