Runway’s pivot from machine learning to browser-based editing software sparks this reflection on the future of video editing

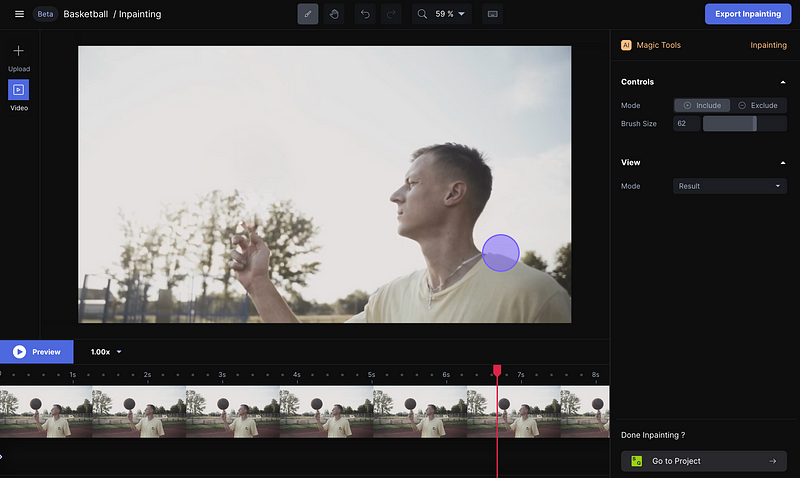

Screenshot from Runway’s browser-based video editing platform, using the inpainting function to remove a basketball from the shot

A couple of years ago, I used Generative Adversarial Networks (GANs) to create a sequence that had previously existed only in my mind: a turtle trying to evolve into a different species but stuck somewhere in between, each form resembling a distant relative of a turtle. At first, I didn’t know what tool could help me make this sequence. Scrolling through my social media feeds, I came across videos in which images transition into each other seamlessly. This technique would have been ideal for the sequence I had in mind, but I didn’t have the technical knowledge to create it myself.

That’s when I came across Runway, user-friendly machine learning software that gave artists the tools to create AI-generated imagery. I created the initial turtle and morphed the image into many different variations by changing some of the parameters of the network to create a “latent walk,” a journey through the vector space of the image network. It resulted in this brief shot from Our Ark (2021):

With this tool, you could generate images and image sequences, train models on your own datasets, experiment with the working logic of GANs and visualize the impact of high-dimensional spaces.
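For readers curious about what a latent walk looks like in practice, here is a minimal sketch in Python. It is not Runway’s implementation: the 512-dimensional latent size, the helper names and the choice of spherical interpolation are all assumptions made for illustration, and the trained generator that would turn each vector into an image is not included.

```python
import math
import random


def random_latent(dim, rng):
    """Sample one latent vector from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def slerp(a, b, t):
    """Spherically interpolate between latent vectors a and b at fraction t."""
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(x * x for x in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    sin_omega = math.sin(omega)
    if abs(sin_omega) < 1e-8:
        # Parallel or opposite vectors: fall back to a straight-line blend.
        return [(1 - t) * x + t * y for x, y in zip(a, b)]
    weight_a = math.sin((1 - t) * omega) / sin_omega
    weight_b = math.sin(t * omega) / sin_omega
    return [weight_a * x + weight_b * y for x, y in zip(a, b)]


def latent_walk(keyframes, steps_per_segment):
    """Return latent vectors that pass through each keyframe in order."""
    path = []
    for start, end in zip(keyframes, keyframes[1:]):
        for i in range(steps_per_segment):
            path.append(slerp(start, end, i / steps_per_segment))
    path.append(keyframes[-1])
    return path


# Hypothetical setup: three random "keyframe" points in a 512-dimensional space.
rng = random.Random(0)
keyframes = [random_latent(512, rng) for _ in range(3)]
walk = latent_walk(keyframes, steps_per_segment=30)
# Each vector in `walk` would be passed to a trained generator to render one frame.
```

Because neighboring vectors in the walk differ only slightly, the images a generator produces from them differ only slightly too, which is what makes one form appear to melt into the next.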

Last December, Runway raised $35 million in its Series B funding round. Then the company pivoted to a browser-based video editing platform. This was a curious move: isn’t video editing software already a saturated field? How is machine learning related to video editing?

This move offers some insight into where video production is heading in the near future. In gaming, instead of running certain games on your own device, you can now “stream” games that run on distant servers via cloud gaming subscription services. Similarly, moves like Runway’s indicate that video editing is moving into the cloud. Normally you’d need a powerful editing computer with fast GPUs to edit and composite 4K footage. With cloud editing, powerful computers in a remote server room do the heavy work while you edit in a browser on a modest machine. A cloud editor could be someone who edits and does visual effects (VFX) work anywhere, on any computer.

The other insight concerns the confluence of machine learning and VFX. Runway is just one team that is applying its experience with ML to the field of VFX. For example, with the models the team has trained, image segmentation or rotoscoping (the process of separating objects or characters from the background) is possible with just a few clicks. Until recently, these tasks had to be done frame by frame. It is also possible to easily remove an object with a technique called inpainting: the computer automatically paints in the background as if the object or person had never been there. For Cristóbal Valenzuela, CEO of Runway, AI “is enabling [users] to edit and generate video in ways that were unimaginable before.”

The film editing process changed a lot with the transition to digital, and will change drastically again as we start to use AI tools. Runway is not the only such project. For example:

- Jan Bot, an editing algorithm that scrapes the Eye Film Institute’s archive and automatically creates videos

- Kaspar, an AI assistant editor that tags, pulls selects and creates metadata

- Tools to edit video interviews by just editing the text, such as Reduct

- Text-to-video, such as OpenAI’s CLIP (and this music video created with it)

- Videos that are generated by AI, such as the work of Casey Reas, Anna Ridler and Refik Anadol. Anadol, in particular, is popularizing the term “latent cinema” to describe this genre, though the term is also trademarked by his company

All these changes prompt us to reframe the practice of creating moving images. In her “State of Cinema 2021” essay, Nicole Brenez, curator of Cinémathèque Française, writes that “technical images have invaded the universe.” These images are created by computers, algorithms, technology, and mathematics. They are images that explain and operate on society. We have been using the tools of cinema, especially editing, to study and analyze these images by forming new relations between them. But now we are going beyond technical images — what about technical cuts? Editing decisions that are made by artificial intelligence? How should we deal with these computer-generated relations between images?

Scrolling through my algorithmically generated social media feeds, I realize that what I’m looking at is a collection of technical cuts. Every day, we deal with image sequences that are generated for us and whose structuring logic we can’t understand. The relations these images have with each other are opaque. We try to make sense of them all day, every day. We have already stepped into the world of AI editing.

Immerse is an initiative of the MIT Open DocLab and Dot Connector Studio, and receives funding from Just Films | Ford Foundation, the MacArthur Foundation, and the National Endowment for the Arts. The Gotham Film & Media Institute is our fiscal sponsor. Learn more here. We are committed to exploring and showcasing emerging nonfiction projects that push the boundaries of media and tackle issues of social justice — and rely on friends like you to sustain ourselves and grow. Join us by making a gift today.