Why AI is not the future, WE are.

The Storyteller from the Future (a.k.a Karen Palmer) developing algorithms covertly. Photo Kevin Barry

[TRANSMISSION LOG #7054—Origination Year 2048: Destination 2018]

If you are receiving this broadcast, you are the intended receiver! I am the Storyteller from the Future and I have come back to enable you to survive what is to come.

I am transmitting this stream of data for you today as this is a pivotal moment in your planet’s timeline. Your planet is experiencing unprecedented global social unrest grounded in race and the economy. Simultaneously, your world is on the cusp of a new frontier of immersive technological and media experiences that will not only entertain but influence your kind in an unprecedented manner: psychologically, consciously, and neurologically.

“Why should I believe you?” is the question I hear you asking.

As a result of prior incidents when details from my time have been transmitted back into yours, stipulations have been placed upon what can be communicated. But I will give you just one insight into where your driverless cars may be driving some of you.

In the future, automated technology, facial recognition, and AI will often be exploited for suppressive means. For example, if you have an outstanding warrant, even minor, when you get into your Uber driverless vehicle, it will be secured, locked down, and you will be driven directly to the associated police station.

You see, the future technology will be used as an extension of oppression and racism. You have seen the beginning of this recently in your news with the advent of Amazon selling facial recognition software, Rekognition, to law enforcement. While the press covered the story of an open letter by employees and shareholders demanding that Amazon stop…well, this story will quietly disappear. Such special relationships between global tech corporations and law enforcement will only happen now behind closed doors in order to prevent resistance. This will mark the beginning of a new era of unethical and inequitable technology and governmental partnership.

But let me not deviate from my objective in this data stream. Let me start at the beginning: My past! Your present!

Wait, they are coming for me! The perimeter of this safe quadrant has been compromised …

[TRANSMISSION LOG #7054: Terminated]

[TRANSMISSION LOG #7055—Origination Year 2049: Destination 2018]

It has taken almost a year to re-establish a secure transmission frequency.

At this time on your planet, I am known as Karen Palmer, a multidisciplinary immersive filmmaker. While I have been projected back into your time, my consciousness remains in my time and guides my current physical being. Therefore, I do not experience the world in quite the same way you do. As the Storyteller from the Future, I apprehend this reality beyond conventional perception. At risk of my safety, I feel that my responsibility is to enable you to experience that, too.

Imagine, if you will, that we are existing in the most realistic video game simulation known to (wo)man. What you perceive as yourself is a pre-selected avatar. Your operating system (let’s call it your “belief system”) is an amalgamation of your data — a.k.a your experiences, interpreted via your personal sensors, more commonly known as your eyes and ears.

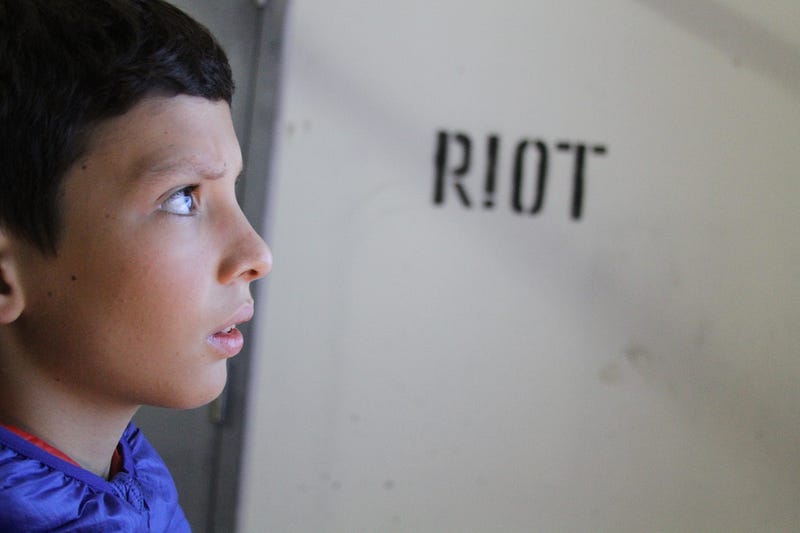

Now imagine that you could re-program and update your software to redefine your reality and hone your perception. This can be done and that’s why I created RIOT.

RIOT is an emotionally responsive film which uses facial recognition and artificial intelligence technology to enable players to navigate through a dangerous riot. You are confronted by a riot cop: Respond with fear and the film goes in one direction. Respond with anger and it goes in another. I have created this because in the time my body inhabits, most of the countries are now police states. I want you to know what it is going to feel like for you and your families to have a police presence everywhere, in the hope that it primes your subconscious to start to resist!
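For the technically curious among you, the branching idea can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the RIOT code: the path names, the `choose_branch` function, the 0.5 confidence threshold, and the "calm" fallback are all hypothetical, and the emotion scores are assumed to arrive from some facial-expression model as numbers between 0 and 1.

```python
from typing import Dict

# Hypothetical path names; the film's actual scenes are not described here.
BRANCHES: Dict[str, str] = {
    "fear": "path_after_fear",
    "anger": "path_after_anger",
    "calm": "path_after_calm",
}


def choose_branch(
    scores: Dict[str, float],
    threshold: float = 0.5,
    default: str = "calm",
) -> str:
    """Pick the next path from per-emotion scores.

    The strongest emotion wins. If nothing was detected, the strongest
    score is below the threshold, or the emotion has no branch, the
    default path is used instead (an assumption made for this sketch).
    """
    if not scores:
        return BRANCHES[default]
    emotion, score = max(scores.items(), key=lambda item: item[1])
    if score < threshold or emotion not in BRANCHES:
        emotion = default
    return BRANCHES[emotion]
```

A call such as `choose_branch({"fear": 0.7, "anger": 0.2})` selects the fear path, while an uncertain reading such as `{"fear": 0.3, "anger": 0.4}` falls back to the default.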

The RIOT sensory storytelling experience enables participants to become aware of their subconscious behaviour and to consciously build new neurological pathways in their brains, overriding automatic behavioural responses and creating new ones. The underlying architecture of the experience is designed to shift individuals’ perception by rewiring their minds, and it’s working.

In 2016, my projected consciousness developed the RIOT prototype with Crossover Labs, Sheffield University for Festival of the Mind, The National Theatre Immersive Storytelling Studio and Dr. Hongying Meng, a Senior lecturer at Brunel University London’s Computer Science and Engineering Department.

The project was inspired by my own frustration as I watched the Ferguson riots unfold on social media, having seen yet another young black man murdered by the police. I wanted people to experience the untold story of real people in a situation who see no other option left open to them. To tell a story of not just being in a riot, but what has led to it and the intensity of fighting injustice even when your very life is at stake. To make you feel your own fear and master it.

As a participant in the film, how you think you would respond may not be how you actually would. So, you could go back into the experience and consciously change your behaviour in order to build new neurological pathways in your brain. People have returned to participate in the film and responded differently based on their awareness of their subconscious behaviour.

Research in neuroscience and cognitive psychology has shown that stories are typically more effective than rational arguments at changing minds. That’s why we selected storytelling as the most obvious form to connect with your people at this time. In fact, the power of these new forms of art and tech is only now becoming apparent. MIT has carried out research that confirms that your brain cannot decipher the difference between VR and reality.

I am part of this revolution in experiential storytelling. But don’t worry: I am one of the good guys.

Here, in a communication ritual you know as a “TEDx talk,” I explain more.

The current second iteration, RIOT 2, starts with an actor dressed as a riot police officer whose objective is to intimidate the participant. Brusque questions include, “Do you have any weapons on you?” and “Do you have anything that can be used as a weapon?” Answers to the second question have ranged from “This glass in my hand!” to “My mind!”

Through the Look Glass. Photo Mikhail Grebenshchikov

This opening experience is designed to engage your cognitive behaviour and imagination and to be gently provocative. Having been primed, you are then directed to enter the RIOT installation quadrant. Then, as you’re watching the film in the installation, the AI watches you back through the webcam. The set design by Kinicho surrounds you in visceral 3D sound. Debris from the aftermath of a violent disorder is strewn around and rubbish spills from an overturned dustbin. A veil of haze and the scent of smoke complete the ambience.

The RIOT 2 prototype has a Machine Learning algorithm that monitors you for the emotions of calm, anger, and fear. There are currently four levels: You come into contact with a riot cop, a looter, an activist, and a young woman being detained by the police. Your objective is to stay calm, make it home alive, and bring the community together.
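To make the monitoring idea concrete, here is a rough sketch of how per-frame emotion labels from a webcam might be summarised for each of the four encounters. It is an illustration only and not the prototype's implementation: the majority-vote smoothing, the `dominant_emotion` and `play_through` names, and the choice to treat "no detection" as calm are assumptions made for this example.

```python
from collections import Counter
from typing import Iterable, List, Tuple

# The four encounters and three emotions named in the text.
LEVELS = ["riot cop", "looter", "activist", "young woman being detained"]
EMOTIONS = ("calm", "anger", "fear")


def dominant_emotion(frame_labels: Iterable[str]) -> str:
    """Majority vote over per-frame labels, ignoring unknown labels."""
    counts = Counter(label for label in frame_labels if label in EMOTIONS)
    if not counts:
        # Assumption for this sketch: no usable detection counts as calm.
        return "calm"
    return counts.most_common(1)[0][0]


def play_through(frames_per_level: List[List[str]]) -> List[Tuple[str, str]]:
    """Pair each level with the participant's dominant emotion in it."""
    return [
        (level, dominant_emotion(frames))
        for level, frames in zip(LEVELS, frames_per_level)
    ]


def stayed_calm(run: List[Tuple[str, str]]) -> bool:
    """True if the dominant emotion was calm in every level played."""
    return all(emotion == "calm" for _, emotion in run)
```

Voting over many frames, rather than reacting to a single one, is one simple way to keep a momentary flicker of expression from deciding the outcome of a level.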

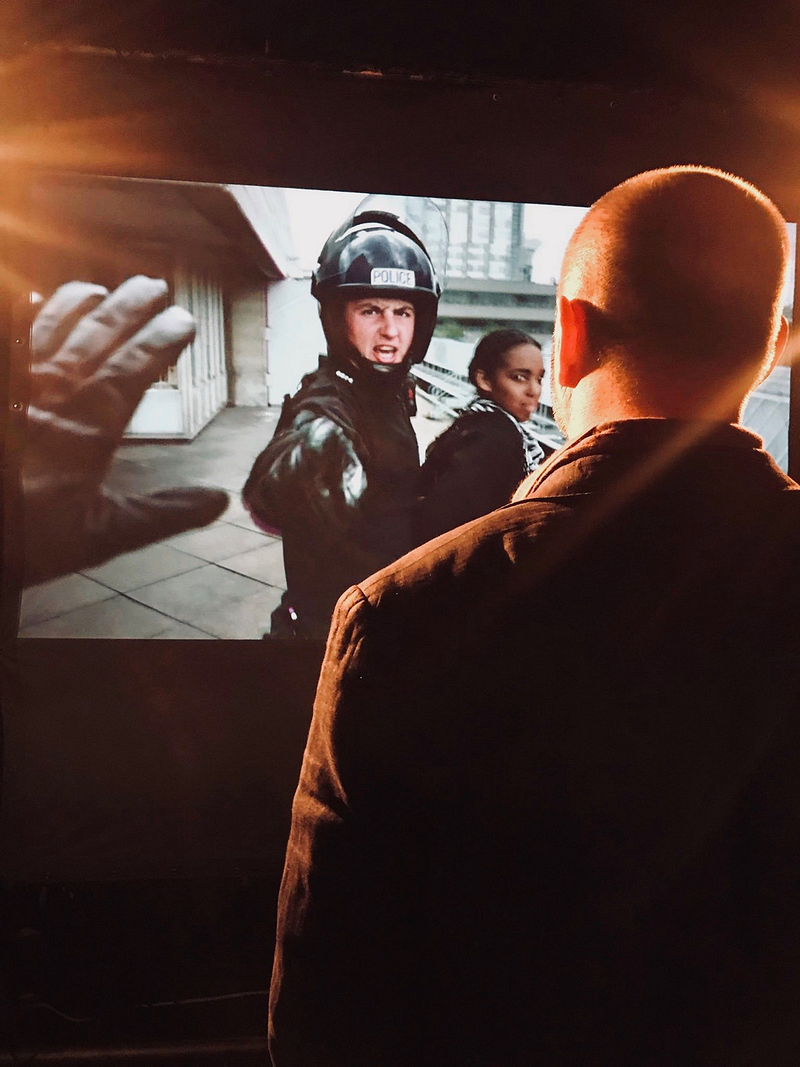

The RIOT installation and participant

The response to RIOT 2 (still in development) has been exponential, largely due to the fact that it encompasses so many disciplines and technologies: film, gaming, A.I., machine learning, facial emotional detection, art, behavioural psychology, neuroscience, epigenetics, the parkour philosophy of moving through fear, and racial tension at this current time of intense global social unrest.

The RIOT “reality construct” seems to be making an impact in your time:

- The RIOT prototype was honoured as part of the Digital Dozen Breakthroughs in Storytelling 2017, in which Columbia University School of the Arts’ Digital Storytelling Lab acknowledged the most innovative approaches to narrative from the past year.

- Articles about it have appeared in Forbes, CBS, Fast Company, Engadget, NBC and The Guardian, to name a few.

- I have exhibited RIOT at the prestigious Future of Storytelling Festival in NYC, at the V&A in London, and at PHI Centre’s Sensory Stories Exhibition in Montreal.

- I was the final keynote speaker at ZKM “The AI Conference: Living amongst Intelligent Machines” in Germany and at Silbersalz “Future, Media and Science” in Halle, Germany, too.

- I’ve spoken at the FoST Global Summit and the Strategic Storytelling Seminar NY, the Google Cultural Institute Lab in Paris, CPH:DOX, Copenhagen International Documentary Film Festival, and Art, Technology & Change.

Speaking at the communication ritual you know as a “TEDx talk” at the Sydney Opera House

In September 2017, while continuing to develop the final RIOT iteration, I was accepted as a TED resident in New York. The TED Network functions like a community of underground resistance experts. While there, I expounded on the behavioural psychology and neuroscience research. Having spoken to many VR practitioners and curators, it appeared that the neurological impact of VR research came several years after the initial experiences. Therefore, I felt it essential to build the technology and the story alongside the neurological research into AI, in order to be fully aware of the technologies’ implications and to create an intentionally empowering experience for participants.

I have recently completed a tenure as an AI Artist in Residence at the ThoughtWorks technology company, where I was continuing to develop the RIOT technology and user experience.

You may be aware of ThoughtWorks as a global technology company that pioneered the Agile process. They have 5,000 employees in 14 countries. Being from the future, I recognise the ThoughtWorkers as a race of consciously evolved beings who reside on their current planet of choice, Earth. They divide their days harnessing the most powerful tool in your current culture, aka “technology,” and spend their evenings and weekends dedicated to social concerns of the planet.

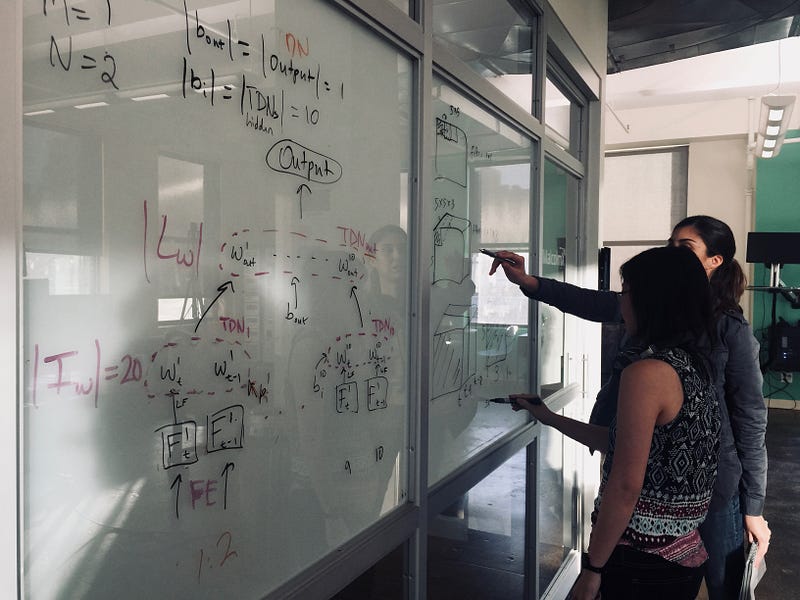

ThoughtWorkers channeling higher consciousness.

The basis of the residency at ThoughtWorks was to develop the RIOT AI system and explore the potential for a multi-modality user experience for the final iteration. This meant building EmoPy, an open-source library for facial expression recognition that contains neural nets used to measure emotions during RIOT.

Watch the following two videos to learn more about my collaboration with the ThoughtWorkers. This first video invited volunteers to be part of the process to collate their expressions as the basis of the Neural Net:

The second video explains the process of how we built a Neural Net:

It was important to cultivate a thriving dialogue through a series of Artist Open Studio Sessions with an international community of artists, creatives, technologists, neuroscientists, policy advisors, festival curators, and academics. (Many of these conscious creators go on to become part of the media resistance movement in the future.) This was all part of the RIOT development process to converge. 3D sound designer Garry Haywood wrote an exquisite article on the types of thoughts coming from these events, Inside Out: Emotion, Witness and Self-awareness from Immersive Experiences.

Artist Open Studio Sessions / Global Interdisciplinary meetup for conscious creators

We also carried out extensive research in conjunction with my TED Residency into behavioural psychology in order to explore if there is a methodology to shift perception. This research was done in collaboration with neuroscientist Elizabeth Waters and Emily Balcetis, a TED Speaker and behavioural psychology professor at NYU (both known cosmic time travelers).

Emily’s lab, SPAM (which stands for Social Perception and Motivation), focuses on the “conscious and non-conscious” ways that people fundamentally orient to the world — how the motivations, emotions, needs, and goals people hold affect the basic ways they perceive, interpret, and ultimately react to the information around them. The lab’s work explores motivational biases in visual and social perception and the consequential effects for behaviour and navigation of the social world. In doing so, the lab’s research represents an intersection among social psychology, judgment and decision-making, social cognition, and perception.

Why is it important to guide your personal sensors (also referred to as your eyes and ears)? Behavioural research shows that the way viewers watch dash-cam footage of police brutality reflects their personal bias. If the viewer is empathetic towards the police, their gaze follows the trajectory of the police. If the viewer is sympathetic to the person being brutalised, their gaze follows the trajectory of the person they perceive as the victim.

So I asked the SPAM Lab: “If we were able to encourage a participant to deviate from their predetermined eye-tracking through the storytelling experience, would we be able to change their bias or perspective?” They concluded this may be possible, and this is what we are now exploring. Often, people are looking at only one part of the screen. Through RIOT, the aim is to direct people’s gaze so they see the whole story.

I am experimenting with shifting participants’ perspective through having them access multiple narratives from different characters’ perspectives. This will inform the next stage of my R&D into racial perspective disparity in America at this particularly turbulent time of racial divide.

The disparity in how white and black people perceive the black experience is more evident now than ever, as white people attempt to use logic to make sense of the consistent violent incidents against young black men. Alongside these come #SittingWhileBlack at Starbucks, #NappingWhileBlack at Yale, or #CookingoutwhileBlack in the park, all “offenses” that have caused white folks to call police. A shift in perception is required to tackle this form of racism, now more than ever.

ThoughtWorkers creating a Machine Learning Algorithm undetected.

As part of the research done into AI at ThoughtWorks, we have come to the conclusion that all AI is biased, because it is trained on data generated or labeled by humans, who are inherently biased. So we asked ourselves, as part of the objective to shift perception: Do we want to intentionally load the machine-learning algorithm with bias? This could allow participants to experience RIOT from the perspective of being a white woman or black male to see how their experiences would differ.

This will be explored further in the next phase of development. Your future! My past!

In the next and final phase of RIOT we’ll be introducing what’s called “multi-modalities,” i.e. further functionality that takes readings from more sensors. For example, as part of my ThoughtWorks residency we researched voice emotional recognition, which would allow the participant to speak to the film and have the narrative branch in different directions depending on the emotion detected.
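One common way to combine several sensors is "late fusion": each modality produces its own emotion scores, and the scores are blended afterwards. The sketch below shows that idea for a face reading and a voice reading. It is an assumption about how such a system could work, not a description of what will ship in RIOT; the `fuse` function and the `face_weight` parameter are hypothetical names invented for this example.

```python
from typing import Dict

# The three emotions the RIOT 2 prototype is described as monitoring.
EMOTIONS = ("calm", "anger", "fear")


def fuse(
    face: Dict[str, float],
    voice: Dict[str, float],
    face_weight: float = 0.5,
) -> Dict[str, float]:
    """Blend face and voice emotion scores with a weighted average.

    Missing emotions count as 0. The result is normalised to sum to 1;
    if both inputs are all zeros, a uniform distribution is returned.
    """
    if not 0.0 <= face_weight <= 1.0:
        raise ValueError("face_weight must be between 0 and 1")
    voice_weight = 1.0 - face_weight
    mixed = {
        emotion: face_weight * face.get(emotion, 0.0)
        + voice_weight * voice.get(emotion, 0.0)
        for emotion in EMOTIONS
    }
    total = sum(mixed.values())
    if total == 0:
        return {emotion: 1.0 / len(EMOTIONS) for emotion in EMOTIONS}
    return {emotion: value / total for emotion, value in mixed.items()}
```

With equal weights, a calm-looking face (`calm` 0.6, `anger` 0.3) paired with an angry-sounding voice (`calm` 0.2, `anger` 0.7) yields anger as the strongest fused emotion, which is the kind of disagreement between sensors that a single modality would miss.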

I deliberately did not use wearable technology because I want people to step into the “world” of the experience and feel that they are affecting the world in the same way when they step outside. This experience reveals the larger truth of the holographic universe that we’ve come to understand in the future — that we’re all inhabiting a high-res simulation that our brains translate back into what we experience as reality.

RIOT participant inside the reality construct installation @ FoST

I conclude this transmission with an invitation: We are seeking more partners in the Resistance!

As your world moves closer to my future, RIOT will continue to evolve as a tool to help humans better apprehend and control their relationship with provocative stimuli and coercive media. As part of the next phase of storytelling development, I will be interviewing people that have been in riots to develop a documentary-based script to produce the new RIOT film. This will be done in collaboration with the National Theatre Immersive Storytelling Studio and its in-house screenwriter.

In addition, we are currently seeking partners/funders/resources to complete the final iteration of this sensory story experience. To contact us please resonate your energy towards our frequency, or use more conventional modes of communication.

I close this transmission as I started, making you aware of the impending revolution of technology, so you are prepared and enabled. As a tool in your struggle, we leave you a gift: We are releasing to you, the people, a derivation of our own EmoPy A.I. Neural Net. The system has been made open source in order to provide free access beyond existing closed-box commercial implementations, both widening access and encouraging debate. Use it well!

Many of you are already part of this invisible movement, so exclusive that you do not even know you are in it. We know you are out there and waiting. This is a beacon in the darkness in this storytelling revolution with art and technology.

Hear this call to join us! Your future needs you! The time has come!

[End transmission]

Read the response

From the Storyteller from the Present to the Storyteller from the Future by Eliza Capai

Immerse is an initiative of the MIT Open DocLab and The Fledgling Fund, and is fiscally sponsored by IFP. Learn more about our vision for the project here.